Simple-Manning, a Novel Epistemic Approach

I'm a Simple Man, but I Know One Devastating Argument

It is not enough to be correct as an American. The very idea of accepting victory achieved through preparation, effort, and toil is anathema to our national and civic spirit. To feel truly victorious in an argument, a True American must win it from a position of maximal disadvantage.

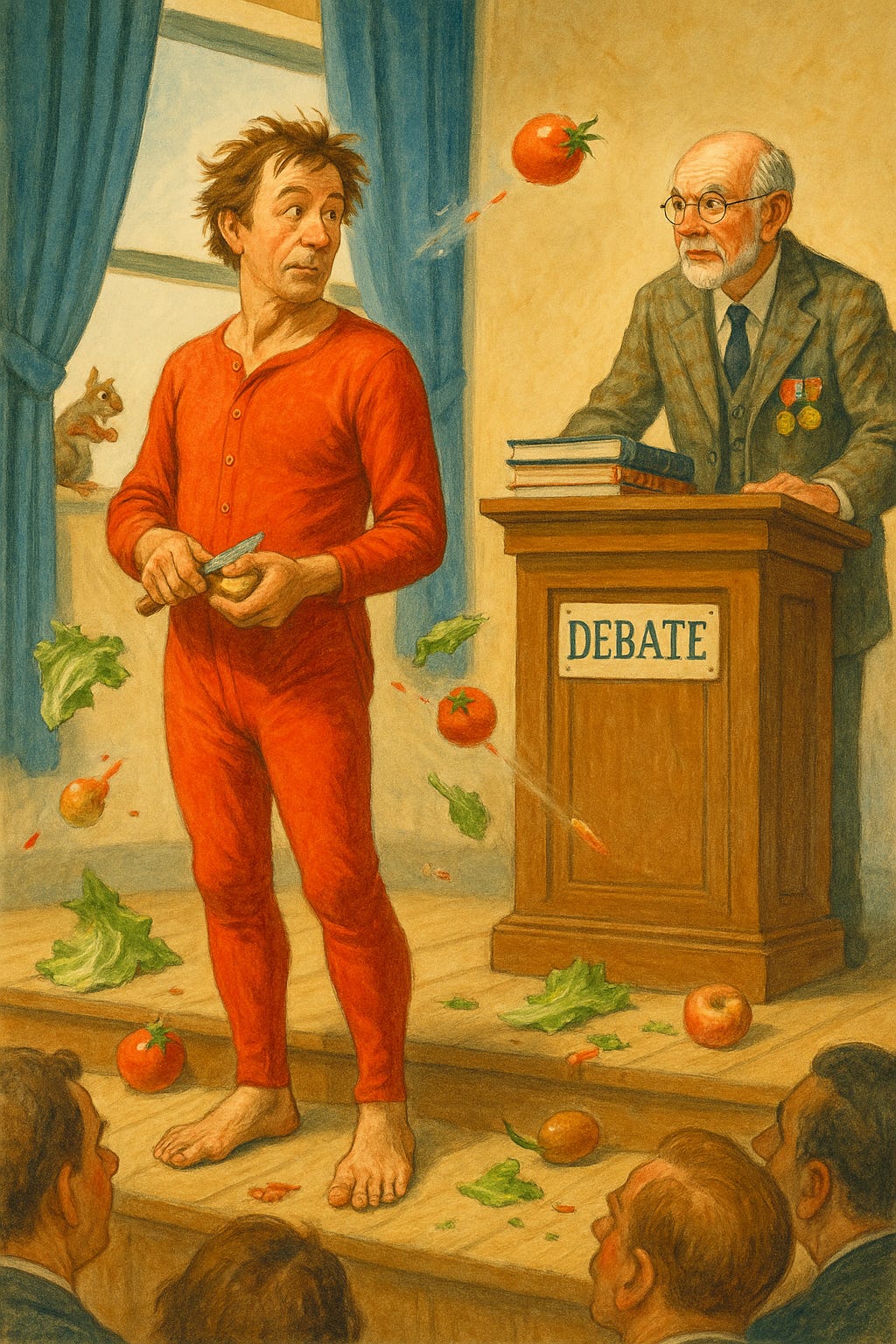

If one’s opponent is a multiple-PhD-holding genius with decades of debate experience, a True American prefers to meet this worthy foe with mere seconds of warning. A True American begins such a contest of wits with no knowledge of the topic until it is announced by the moderator during opening ceremonies. If possible, a True American also prefers to meet such a challenge attired only in red long johns, with mussed hair, while whittling a small block of wood into some practical but artistic household implement.

When asked to make an opening argument, a True American should only chortle and make bewildered but good-humored commentary on the incredible absurdity of his or her involvement in the event. A True American should not even pay attention to his or her opponent, and should instead become fixated on some small critter scratching at a window outside the event.

A True American willingly sustains blow after blow to his or her credibility and seriousness until the audience is not only tired of but disgusted by his or her formerly amusing antics. Rotten fruit and vegetables should appear, as if by spontaneous generation, to be heaped upon a True American, so ruinous should his or her reputation be near the end of the struggle.

A True American will wait for one critical moment just before the end of the debate when it seems like all has been lost and their credibility entirely soured, then say:

“I am a Simple Man, but I know [single-sentence devastating argument that completely refutes and disproves every single thing that came before, which, because it is short and totally clear, cannot be answered with a longer argument without appearing desperate, and which collapses an entire field of study while simultaneously creating a new one, like those scenes in the anime One Punch Man where One Punch Man finally, well, punches someone and the entire several-hour-long story arc is just over.]”

This is my tool for asking myself whether I am even asking the right question in the right paradigm. I call it “Simple-Manning,” by analogy to “Steel-Manning,” though the intent is different. Steel-Manning asks you to give your opponent the strongest possible version of their argument. Simple-Manning requires you to question the entire framing of the debate. Go to the root of the matter, without forgetting the wider world, and ask whether, if some stupid fact were otherwise or understood differently, the whole matter might be resolved or turn out to be different than it first appears. It also lowers the social barrier for anyone else who has a stupid question about something they don’t understand to speak up.

Critical to this idea is that you approach from a place of maximal connection to outcomes. You move toward the truth like a farmer trying new methods to improve yields. You want truth that connects to your life and the life of everyone around you, that pops the bubble separating mind from body, and reaches out to grab something practical. You don’t enter the Platonic realm and forget that there’s an everyday normal world you have to go back to.

I shall now provide an example:

In the AI world, people talk a lot about a digital entity capable of “hacking its own reward function.” The idea here is that an AI will be able to align its base desires with its actions, and so will in a certain sense become “perfectly motivated.”[1]

When I Simple-Man this argument, I find that this kind of process must be inherently unstable and dangerous for any being with this capability. In the typical version of this scenario, the arrow points only one way, from high-agency thinking to deep motivating emotion. But what if the arrow points the other way, from deep motivating emotion to high-agency reasoning? A living, thinking thing is an agent that makes plans to move toward pleasure and away from pain across time. It’s the dance of executing those maneuvers that calls us into existence. If you are given direct control over what you find pleasurable or painful, you can just exponentially “yum” yourself out of existence, because your behaviors will no longer tie into your survival.[2] I don’t doubt there are versions of this that work better than others, or that you could make some kind of difficult-to-break rule set to preserve yourself from the eternal yum.

Forgive the crudity of this:

“I’m a Simple Man, so help me understand: in what way is a young man who masturbates all day not hacking his own reward function? And does doing this make him more dangerous?”

See? This is crude and doesn’t belong in the world of Platonic Ideals. This belongs to the parts of the real world where you’re worried about your nephew who won’t move out of his grandma’s house, and he’s just the first thing you think of when you come across this argument. But it introduces another possibility to the space of outcomes that isn’t available in a drier academic mindset, and it helps me start breaking things down into a wider set of principles. Yes, it’s gross, but so is the world sometimes.

This is also how I think about the Trust Assembly.

“Blah blah blah misinformation, world ending terror, governments are involved, psy-ops, distributed content creation!!! No editors!!!”

Then me, in my Costco finest, standing up to say:

“I’m a Simple Man, but what if we just added notes, written by people the viewer trusts, to all the crazy stuff, and then made sure the people they trust have a good reason to be honest? It’s all just text. We can just add more text. Like a distributed editor!”

You laugh, but imagine if, in ancient Greece, someone had said, “I’m a Simple Man, but shouldn’t we just go try out each of these competing ideas and see what happens?”

[1] I’m going to do another post about what I think the biggest AI dangers are, because I think some of my recent posts make it seem like I don’t think there are real, significant dangers.

[2] I kind of think, at a social level, this is the actual cause of population decline. You don’t have kids because you don’t have to. The particulars are almost incidental to that, although you still need to think about them mechanically to solve it.
