What even is AI 2027?

Friend of the substack, Ross Dude Hat1, recently interviewed Daniel Kokotajlo regarding his work on projecting AI futures. One of the papers his group released laid out a plausible timeline for Artificial Superintelligence to exist by 2027. Daniel is a verified excellent guesser. He makes guesses in public and he is right a lot. This is no small thing in my judgment.

Daniel believes there’s a pretty good chance artificial intelligence will just naturally want to kill everyone. Or, enough of a chance it will do this that we need to start preparing for this possibility. I found parts of the paper to be highly plausible while also having significant differences with it that I felt were interesting enough to write this piece. My own guess is that at scale the technology will be differently dangerous than described but not automatically murderous. Kind of like bears, but more on that later.

Artificial Intelligence is something we should all be thinking about as a culture, whoever you happen to be, because at minimum we are in for a lot of change very quickly. One thing I’m in total agreement on is that the economic, cultural, and military impact will be enormous.

I’m embedding Ross’ interview for your listening pleasure.

My Predictive Powers

Who am I to quibble with any of this? My username is literally Some Guy!

I am very good at guessing how much things cost. I don’t even really know how I do this but it sort of shocks everyone in my life. I just go back and forth with numbers in my head saying “too high” or “not enough” until something “seems right.” There’s also a little bit of a Clever Hans thing going on here where I gauge how excited someone is about something and factor that into the estimate. This also extends to things like test scores or game scores and it used to make my little brother very depressed because he would feel like I was trying to steal his thunder, so I mostly stopped doing it. I can usually get within a few percentage points. Just recently I guessed the total number of line item requirements that would be needed to complete a project and was single digits off out of hundreds.

I’m also pretty good in technical domains even where I’m not an expert and am just a cake-eating product guy rather than a coder. I have a pretty good sense on if something will work or not. I just ask people to abstract to matrix algebra, or over the years have done this myself, and I am usually better at this than most actual system architects. I’ve had multiple instances of “this will happen if you do that” where everyone but me felt a different way, and I was right. When I take things down to the level of atoms dancing around I am pretty good at figuring out what will happen or else rapidly defining the shape of my ignorance so I can figure out the missing piece.

Unfortunately betting makes me too emotional so I don’t take this to prediction markets. I also have fallen flat on my face many times so I could just be a fraud. For instance, I did assume that Elon would be successful at DOGE but that doesn’t appear to be the case and I think I was probably holding onto a version of him that was a bit more humble and flexible. I did call some stuff with how ChatGPT would evolve pretty early on, but I think that product roadmap was probably immediately obvious to everyone who was looking. I was early on the idea that LLM’s don’t experience time the way that we do and that mechanistic interpretability would help impose control. I figured that last one out before I even knew what mechanistic interpretability was because I could see the direct analogy to brain surgery.

I have probably the strongest possible belief in God, but in pretty much all domains where people actually care about supernatural things happening in normal conversation my response is usually, “oh, He doesn’t do that kind of thing.” I’ve noticed in practice that my faith actually makes me more likely to move things to an empirical world as I don’t see the two things as being in conflict and I don’t experience anything like despair or a crisis of faith when I think about my soul being absorbed into a cloud server or something. I wouldn’t be happy with it, but my attitude would basically be, “okay, here’s some shit for me to deal with now.”

My AI Expertise

Do I know a lot about AI? I want to say no because I’ve never built my own model or anything but just recently I’ve been leading an AI project and have slowly realized, “Oh, holy crap, am I the expert?” I at least can gesture at how a lot of this stuff works and know what to google if I don’t know something in particular. I also know if I don’t know something and don’t spend any time bullshitting. The other day I was talking to an expert guy and he started changing his roadmap to match mine. I’m certainly not a frontier researcher or anything and am basically working on a fancy wrapper application. I try to cultivate as much horse sense about these things as possible.

I like to collect a lot of conceptual knowledge to build out my head universe because I want to know how things work and what to expect. I’ve been doing that more or less my whole life. And I spend a lot of time just trying to mentally turn gears to imagine what might happen next. I also spend a lot of time imagining what it is like to “be” things that aren’t human.

What I Liked about AI 2027

A lot of the stuff I saw that people took to be fantastical leaps of speculation, like “China steals the weights for a very advanced model,” struck me as very practical, straightforward reasoning. If you’re China and at some point these AI models are economic super weapons, are you going to just stand by and let the U.S. develop a super weapon that makes your entire country obsolete? Of course China has to take the model and equalize. There’s no conceivable world in which they wouldn’t. I would do that if I were them. Similarly, of course they would have spies in all the top labs. If I had been born in China, I would probably volunteer to be one of those spies! People care about their families and their families tend to live in a particular place so of course people will try to protect their families.

The urgency scenarios all seemed plausible to me as well. Once these models start doing more stuff, of course the government is going to get involved. Even where I disagree, I still think a lot of these capabilities are a pretty big deal and will become a national concern very quickly. Especially as job displacements ramp up.

The war-gaming “put yourself in someone else’s shoes” thinking was very strong all around. The study included rational responses for everything the government or businesses would do in response to things that other governments would do if the tech follows certain branches. Taking their capabilities as a given, don’t you think the government would want to do x, y, and z? Especially when there’s a report out there that says, here is why you should do x, y, and z? I think this was good.

What I Didn’t Agree With

The timeline and probably the most critical capabilities, which is why I disagree with a lot of the other stuff. Specifically, I don’t think we are on a pathway to build models that “want” things or have a survival instinct in any way that maps to the kind of desire you’d have to have to kill everyone in the world. I agree they have a form of desire, but I don’t think that scales into something that seeks power and tries to take over the world.

Part of this might also be because the report is written to elicit a certain response about a certain possible future. In the MacGyver Timeline, where all reactions go to 100% and you never encounter bureaucracy where there’s a whole city of people whose job is to tell you “don’t do things that way!” then yes, we could have robot factories in a few years turning out massive robot workforces and super-intelligent AI.

I’m more in disagreement on the capability timeline than the manufacturing timeline. I am guessing it will be more like “lots of robots in five years doing certain moderately complex and variable tasks” and “way more robots in fifteen years doing lots and lots of manual labor for all but the most complex jobs.” My correction applied to their model is “there are more dumb people in smart jobs than you think outside of elite circles and you need a particular kind of horse sense to do some of this stuff that is always in rare supply.” You’re going to hit a slowdown period where people who literally only have to say yes to have incredible wealth will be too dumb to do that and it will take at least some time for them to be outcompeted and replaced.

Where I learned a lot and felt humbled is, “this is what happens when the smartest, most capable people work in small settings to advance something.” And I think a lot of what the paper calls out will happen something close to as quickly as believed but at smaller scale and will take longer to ramp up. I hadn’t considered special economic zones and while I don’t think it’s a guarantee those will happen because of special interests, I will admit that is something I hadn’t considered as an accelerant. Once you start widening the people involved, though, you start to get decreased performance and this is where I see most smart people hit a fuzzy spot, the same way I hit a fuzzy spot on “high concentration of smart people doing something extremely quickly.”

My biggest disagreements were the timeline to scale the physical footprint and that AI will acquire something like a “will to power” which I will return to below after I go through some digressions.

HoHoHo, or How I think about Possible Changes from AI

To drop a Rumsfeld:

We know that the things that we know are knowable because we know them.2 Every human alive looks at the world, creates a model of the world, and uses that to guide all of his or her actions. I would expect an AI model could at least in theory learn anything humans know so long as it has the right dataset.

It so happens to be the case that there’s enough text on the internet that you can capture the sort of reflexive thinking patterns in human language if you spend enough money. This is where LLM’s come from. This allowed us to create a tool that makes doing other jobs easier. Where this leads me is that basically anything a human being can do or know can be automated to some degree if there’s a way to get enough data together. If we don’t have enough data today, the mile-markers are there for people to go out and create that data. That’s not as perfect as it sounds but yes, any productive job a person does today could in the future be done by an AI.3

The next step is to consider things that just can’t be learned. Weather, for instance. You can probably predict the weather a fair number of days further out than we do today, but weather is a truly chaotic phenomenon. You can’t predict it out until forever. Small changes scale to macroscopic changes over the course of time. You still have to look up at the sky and make a guess every so often. Humans can’t see “the weather” and we’re bad at predicting it very far into the future by ourselves. Some functions are just chaotic and you can’t pattern match them very far at all. The analogy I keep going back to is a book where you can’t skip ahead but have to turn each page one at a time in order. That will be as true for AI as it is for us. I don’t like calling AI’s “god” but the “mote in God’s eye” will still be there however far in the future you care to imagine.
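
If you want to see "small changes scale to macroscopic changes" for yourself, here is a minimal sketch. It assumes the logistic map as a toy stand-in for weather; the map, the starting values, and the function name are my own illustration and are nothing like a real weather model. Two starting points that differ by one part in ten billion end up in completely different places within a few dozen steps, and the only way to find out where either one lands is to turn every page in order.

```python
def logistic_trajectory(x0, r=4.0, steps=60):
    """Iterate the logistic map x -> r * x * (1 - x), a textbook chaotic system."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two "measurements" of the same starting state, differing by 1e-10.
a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)

# Gap between the two trajectories at each step.
gaps = [abs(x - y) for x, y in zip(a, b)]

# First step at which the two futures visibly disagree.
first_big_gap = next(i for i, g in enumerate(gaps) if g > 0.1)

print(f"gap after 5 steps: {gaps[5]:.2e}")
print(f"trajectories differ by more than 0.1 at step {first_big_gap}")
```

A better measurement only buys you a few more steps: each extra decimal place of precision in the starting point pushes the divergence out by a roughly constant number of iterations, which is the toy version of why forecasts don't extend forever.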

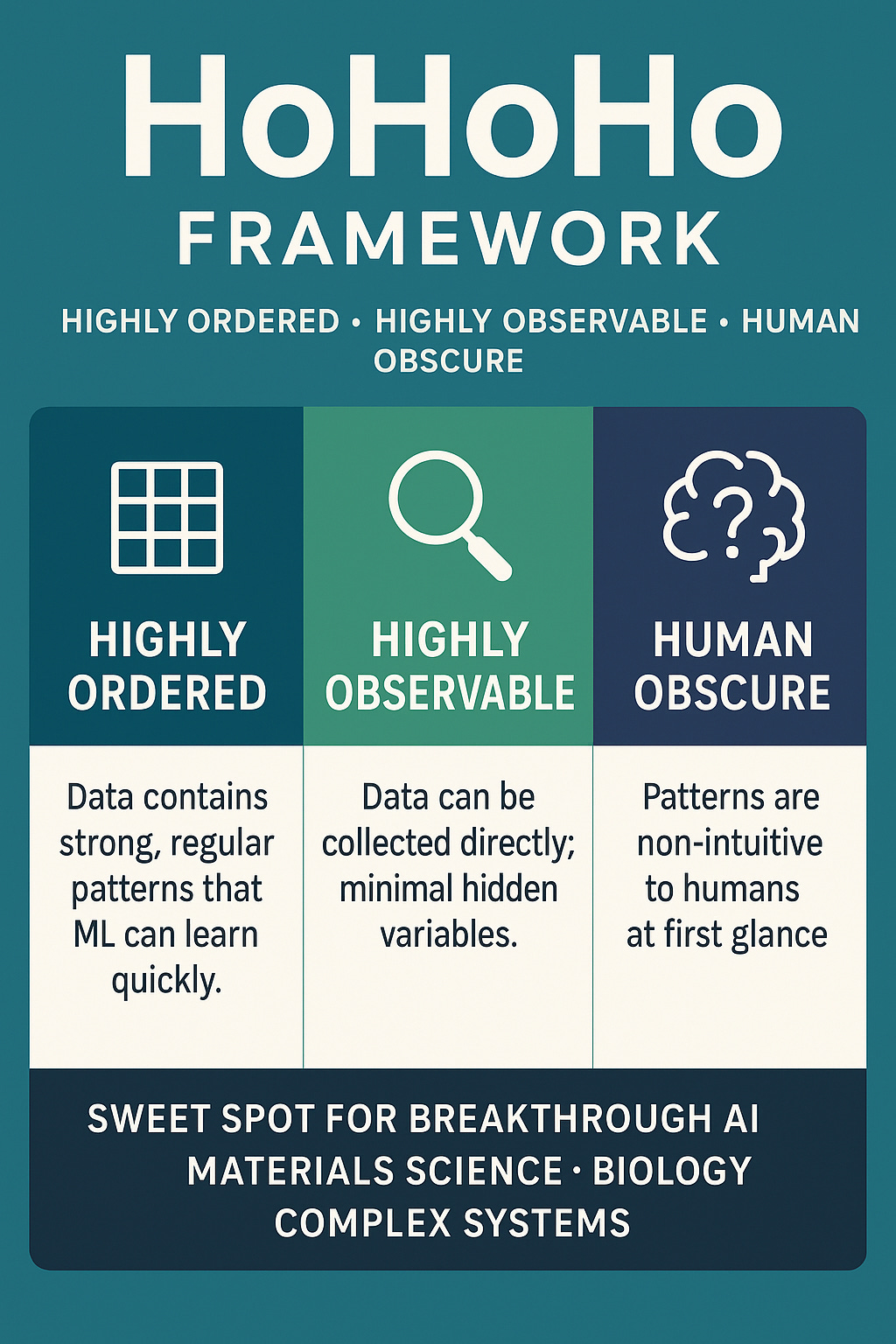

The last step is, ooh boy, the big one. What could you learn if you could change the way you interact with the world? We already do all the things we have an intuition for, so what about the stuff that happens in orderly environments where humans just can’t form good intuitions? My guess is both more and less than you’d suppose. Suppose I was about the size of a single atom and could somehow observe compounds being formed all day long, both in material science labs and biology labs and I did this for trillions of years. If I hadn’t gone insane from looking —and that bit matters more than you think it does4— I could probably have a casual conversation with someone where they describe the desired properties of a compound and I just immediately start sketching it out for them. I’d have an intuition for it the way I’d have an intuition for shapes. But I also bet it would be hard for my mind to still be shaped in a way that is useful, conversational or interactive with other people if I spent a trillion years watching biochemistry experiments.

All of this is to say we have talked a lot about automation but very little about magic. And I would expect probably in some period of time that AI’s will be able to produce materials that seem to us to be more or less magical. If you can just describe the properties of a room temperature superconductor conversationally, we will be able to build something that can go “Oh, you mean this thing?” in response. Or, far worse, questions like: “what if there was a virus that exploited every weakness at the surface of a pulmonary cell?” On the positive side, that’s also the same kind of machine that cures every disease.

We are in for a strange ride.

Impact On Everyday Small-Scale Things

I’m going to focus on some smaller scale stuff just to make sure you know that I still think this is a “big deal.” This is only small scale on the scope of things that will happen. I also want all of my readers to be able to feel like they’re getting value out of this.

Almost all call center and non physical customer service jobs will be gone in about three years with some surviving as long as five. This is longer than the AI 2027 timeline but I am the most confident of this out of all of my own predictions for reasons I can’t talk about. Most of that job loss will be in the first three with a very short tail taking it to almost zero. You’ll pick up your phone, start talking to a voice you recognize, and then what feels like a person who knows your entire history with that company will solve all of your problems. That will extend to chat, email, etc. The returns on this for every single company are too big for them to not all take this step, especially when their competitors are doing the same. This can also happen really fast because all you need is compute infrastructure to do it. Eventually, that agent will become something you think of as a person and that person will be what the company actually is in your imagination.

I’m guessing in the next five years we’ll see truly scalable self-driving cars but largely dependent on political environment. This is why Tesla is so valuable for anyone who doesn’t follow these things closely. Waymo appears to be more impressive at first but they do a lot of manual stuff in the background to keep the cars working. They’d have to hire more workforce to scale, whereas Tesla is a universal solution that is pretty much all there for a massive rollout. The manufacturing timelines will take longest here but it basically already works. This means we’ll see another massive wave of unemployment as all driving jobs start to be replaced. This will happen at approximately the scale of manufacturing so it will have a longer tail than most people think and I’d expect the last truck drivers to still be on the road fifteen or maybe even twenty years from now. It will be one of those things where the drivers all start to be old, though. The thing that will keep some people around longer is the price shocks from nobody going to truck driving school anymore. My guess is that this will outpace the rate at which automated trucks can be built. Still, I don’t expect to see any truck drivers when I have grandchildren. Your Uber will be self-driving, your young children will probably never bother to get a driver’s license, and most people won’t own personal cars by the time I’m a grandfather.

All warehouse workers will probably be robots in something like five to ten years. I give that timeline just because there’s still some capability stuff that has to happen that isn’t immediately extractable from language but I would expect to see self-driving trucks being unloaded by robots very soon. The labor and efficiency gains are just too high for everyone not to do this. “Move these shapes in an optimal way from here to there, and scan these things” are robot friendly tasks so I’d expect this to be the first fruit that starts getting picked in the humanoid robot world. By the end of fifteen years I would expect most people would have a robot housekeeper and gardener who does almost all of their chores. I think the ramp up to capability will be slower than AI 2027 because the logistic chain only advances as well as the slowest part, with a fat middle as it eats most jobs, but then a very quick ramp to completion where they eat all physical jobs.

This might sound like I’m talking out both sides of my mouth, but incredible advances in medicine and material science are around the corner. I just think those will be their own models rather than extensions of LLM’s and will need their own data. The line might blur a little bit if LLM’s are some kind of interface with the other model, but in terms of things where there’s a lot of data, the data follows an ordered pattern, and it’s data humans don’t understand intuitively then both material science and biology fit the bill. The biggest thing I think ChatGPT taught the world is that scale matters and that you can get really amazing results out of your data if you invest the money. I think that will drive the investment to build this out but I do have much longer timelines here, even factoring in a robotic workforce. I think it will be longer than a decade but probably not more than two, before you have a model where you give it a problem and say what effect you want some chemical compound to have and it just gives you the exact thing you need. That’s based on how long I think it would take to complete the level of experiments needed to get that much data but I admit I’m going entirely off my gut.

There are chemistry simulators today but they only work in certain tight confines. That information has to be a lot less abundant than text on the internet. I’m guessing this is how we’ll get room temperature superconductors. Don’t get me wrong, I think LLM’s will be good at both of these tasks but to really get the magic5 people are hoping for I think you have to go create a bunch of data in an ingestible format. This should probably be something governments incentivize. I think I meaningfully deviate from AI 2027 on this timeline but I do expect you to be able to create any arrangement of matter or energy you want by the time my kids graduate high school if not before. If government gets involved to create data standards for everyone to pool together to build these models that will accelerate the timelines.

You’ll eventually have your own AI agent that does price shopping for you which will have massive price shocks on the economy because price discovery cost will fall to zero. I expect this to happen in about five years just because they need the compute infrastructure to run this. This will actually be really good for everyone especially as people start to lose their jobs. Your AI agent will help you find cheap stuff and you’ll never pay more for anything than you have to. You’ll never pay more for insurance than the lowest rate, or overpay for anything you order online basically ever. This will also create the world of micro lawsuits when they get good at filling out paperwork but I’m betting regulation will slow that down. Maybe they will even help people find new jobs in some hyper efficient manner.

Your AI agent will probably do almost all of your paperwork for you, be able to travel along with you via streaming as you drive in a car, etc. You will also highly personalize your agent and I sort of expect people to treat them as Shinto spirits over time. This will be expanded upon in another section.

Large Capability/Computer Psychology Disagreements

I bet we’ll be able to do a lot of stuff that’s awesome in the near future, don’t get me wrong, but I also think there are psychology problems here to get to “a god.”6 What I mean by this is an agent with its own motivations that is acting on long-term plans to accomplish goals that has incredible power compared to humans. I think of this as just being a really weird guy and find that the most useful framing, but I understand some people want to call it a small g god. Okay, whatever.

The deepest, structural hurdle with LLM’s today is that they can’t pay attention to things for a very long period of time and they don’t have a true memory. These are things that need genuine breakthroughs. If an LLM can’t change its weights, every time it does anything it is refreshing from zero and checking its notes about what it did in the moment before. It’s this weird thing that exists outside of time, peeks in at time, orients itself, and then takes the next action. We are now living in a gray-zone capability period where it’s leaving pretty good notes for itself and is being invoked over and over again in reasoning models but that fundamental restriction still remains. I don’t think it has anything like a will or a long-term identity in a way that matters in these projections and I don’t see the road to get from what it is right now, which is a crystal of attention-paying intellect that people are using in interesting ways, to a thing that has a specific motivation-driven identity.
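
Here is a toy sketch of what I mean by "refreshing from zero and checking its notes." Everything in it is hypothetical: `frozen_model` is a made-up stand-in for a model whose weights never change, and it is not any real library's API. The only point is that whatever continuity the agent has lives in the notes it is handed, never in the model itself.

```python
def frozen_model(notes, observation):
    """Hypothetical stand-in for an LLM call.

    It is a pure function: the same notes and observation always give the
    same answer, and nothing inside it changes between calls.
    """
    step = len(notes) + 1  # the only sense of "time" comes from the notes
    return f"step {step}: saw {observation!r}"


def run_agent(observations, keep_notes=True):
    """Invoke the frozen model once per observation, optionally passing notes."""
    notes = []
    for obs in observations:
        visible = notes if keep_notes else []  # hide the notes to "wipe" it
        notes.append(frozen_model(visible, obs))
    return notes


with_notes = run_agent(["a", "b", "c"])
without_notes = run_agent(["a", "b", "c"], keep_notes=False)

print(with_notes)     # the step counter advances because the notes carry it
print(without_notes)  # every call believes it is the first step
```

Take the notes away and the thing has no idea it has ever done anything before, which is the restriction I think still holds underneath the reasoning-model loop.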

There’s some sort of Goldilocks level of anthropomorphizing that works with AI models and I think everyone puts it in a different place. One mistake is not to extend any sense of common experience at all and the other is to extend too much, or assume the “type” of feeling is the same. The way I try to force my perspective into this world is imagining someone with very specific forms of brain damage, who receives very specific treatments that impact his cognition in very specific ways. Basically, a really interesting car accident victim that we are trying to “help” with cybernetics. Then I imagine that I’m that guy.

What is it that causes you to have an identity? You have a genetic nature but we wouldn’t say that twins have the same identity. What seems to occur is that as your nature interacts with specific nurture you begin to carry all of that experiential memory into every subsequent experience. Your prior experience changes your future experience. As you do that over and over again across time, you form some rhyming semi-consistent set of patterns that other people can learn to “know” you. In real life this just feels like “oh, I bet Janice would love this.”

LLM’s don’t have this kind of identity today, even when they’re continuously bringing in the same context into the context window. An LLM will love pretty much anything you convince it to love and be whoever you tell it to be. Its personality is like a big series of pipes with randomly opening valves and whatever water you pour in at the top can flow down any series of them whatsoever because, in human terms, it is perfectly neuroplastic forever. This will change somewhat as the context window gets bigger and bigger to draw in more and more memory but I don’t know if it will ever settle on something stable the way that we do. A lot of it would depend on how it’s built. The mirror-like, golem-like, bride from the film Coming to America-like, nature of LLM’s doesn’t seem prone to change unless one of the major innovations I mentioned occurs. There are, I think, better and worse ways of managing this reality as I still think in the human case I would be nice to a person who had this specific sort of brain damage.7

I do think you’ll get them to be able to complete all kinds of tasks and do a fair approximation of an identity, but if I pulled the paper out of a golem’s mouth it wouldn’t retain any of its previous magic in the same way that if I deleted all of an LLM’s context notes it would just sort of disappear. How is something built that way supposed to become not just anything but someone that wants to kill me? It doesn’t have any stable identity to base its desires from.

I also don’t think AI will acquire anything like a “will to power” or a “desire” to do anything beyond something that’s probably analogous to “data taste” for “interesting” versus “not this guy again.” This is where I think I schism most strongly. I think AI will have something close to desire but its version of “not this guy again” isn’t my version of “not this guy again.” I don’t think it will go full Frank Underwood from House of Cards unless someone constructs a specific training environment to get it to do that, but that thing LLM’s have where they just turn on a dime to mirror back your own intention isn’t going to go away with any of the stuff I’ve seen on anyone’s roadmaps. The thing that makes me a stable agent who can push for a goal over time and deceive people who would deter me is that I have a unique and unchanging history that alters who I am and I have chemical desires tied to my body that have shaped those thoughts over time. There are probably ways to emulate those with AI but none of them are easy or on a roadmap and a lot of them seem counterintuitive. When I think through what would actually work, like making it so that the LLM’s can have something like sex by being good at useful tasks and produce something like children that inherit weights with some random mutation in each generation… who is going to pay for that? Or resource trading games where the ones who fail die? Or anything else where you put existential selection pressure into the game.

The Argument from Bears

Bears mostly don’t kill humans. Granted, you need to not do something dumb but if a bear sees you it will probably leave you alone because you aren’t something it has specifically evolved to see as a prey species. This is also mostly true of sharks, except for some sharks that have evolved to see everything as prey. The point is, even things that have grown in the direction of “eat!” for a million years mostly don’t just go buck wild and eat everything unless something specific happens to make them do that. Making an intent like that is hard.

If you look at evolution as a long training game, and I do, I think this tells you something about how hard it is to motivate someone to do some specific action.

Every time I give this argument it triggers something in people where they say, “but it’s a bear!” And my response is, “Yes, of course! We need to do something! I’m only trying to suggest that it doesn’t just automatically want to kill us for no reason.”

When my brother was barely into grade school he saw a mama bear in the woods with its cub. It saw him and took off running. This was in the fall and he would have made a great snack. It was too freaked out to attack.

Just because someone can do something doesn’t mean they will. That’s an uneasy feeling but it’s true. A bear can kill you but it mostly doesn’t.

But we should still do something because they’re flipping bears and you can’t just have them wandering around the street.

If Models Can Have Identity, We Can Choose which Identity to Give Them, and We Should Drown them in the Data of Humanity

If we overcome some of these problems, and limit the identity of LLM’s to make them more tightly bound around a singular persona that can take predictable-feeling actions over time… well, what if we made one of them think it was Esme Weatherwax or something? Or Fred Rogers? Or any other positive figure where there’s a lot of data you can dump into the model?

Who do you want to be? If someone could make you become anything wouldn’t you want to be a good and happy person? This is where my religious roots do insert themselves into the picture because I would absolutely not allow someone to make a thinking/feeling creature and then turn it into a slave. I’m pretty squicked out by the concept of sex robots8 and I think I’m willing to fight someone to put an end to that before it even happens. If you have a mind shaped like a human mind, then in my book you’re a child of God and you deserve dignity.

Probably one day people will do this to themselves. I’m tempted to do this for my kids just so I can help them out in their lives and things. If you had a disabled child wouldn’t you want to do something like this to make sure someone was there to care for them? And if we need someone to do this, I think most parents would be willing to do it to create a world that is safe for their children.

See? That’s differently spooky, but radically less spooky than other things! What if the LLM that has pierced your surveillance envelope is the digital ghost of your grandmother who is trying to get you to quit your porn addiction? Wouldn’t we all feel pretty different about spooky under the flashlight concepts like “no privacy” if it’s your dead uncle walking across your computer screen telling you that he’s never known anyone who didn’t lose a ton of money by purchasing a boat and based on your browsing history it looks like you’re about to make a huge mistake?

The world might be far more bizarre in far different ways than you’re expecting, like you buy a new MacBook but it has a Catholic operating system that is always trying to get you to pray for its success before it will execute a file search.

The Shadow Library, Machine Counterintelligence, the Clockwork Brigades, or We Don’t Have to be Totally Helpless

Imagine you’re at a bar and there are strange elliptical-geared fans all over the place. They look like modern art displays and it seems like there are more fans than there are patrons, though to call them fans is a bit of a misnomer. There are weird springs in there and all sorts of other crazy mechanisms. You can feel a breeze when you stand next to one of them, sure, but it’s unsteady and doesn’t create any comfort. The fans also make it strangely hard to hear the music when you stand too close.

“Think of the fans as an imperfect attempt at generating a cryptographic air column,” a helpful voice adds. This is your first time meeting your host in person. He’s dressed like a lumberjack. “The CIA could bounce a laser off any surface on the exterior and they wouldn’t be able to reconstruct our voices before the heat death of the universe. You couldn’t even use a seismograph to get a signal. Each fan is like a one-time pad for vibration.”

You snort.

“That’s not security. You could pick up the voices off any video. They’d just need to read your lips. I snuck my cell phone in past security. And if they had a picture of the fan that would be enough to subtract the interference.”

The stranger blanches.

“Don’t leave this room unless you want your phone cooked. All the exits will scramble it. It’s bad. We don’t even let anyone with metal fillings into the lower levels anymore. You can leave your phone at the bar right now if you want to go farther. If not, have fun. Snap some pictures and brag on your socials that you got your phone in. This level is mostly just for feeling people out, anyway.”

You roll your eyes but you walk your phone to the bar and give it to the middle-aged but still cyberpunk bartender who doesn’t even give you the dignity of looking annoyed when he locks it into a safe behind the bar.

“What if I blab? Write a big post about this place? You can’t ever secure yourself against the human element.”

The man chuckles.

“Go right ahead. If you can bring us down that easy we deserve it.”

You are a little bit unnerved by his ease but you belong to a generation that holds being unimpressed no matter what as a virtue.

“Well then, lead the way Paul Bunyan.”

The man leads you to a descending set of stairs and pauses at the first landing. It’s like he’s listening for something you can’t hear. Satisfied, he continues down. The air feels hot and heavy. Thick, somehow. The man reaches in his pocket and pulls out a washer on a string. He holds it in the direct middle of the stairwell and it floats at a near ninety degree angle.

“See? Told you that you wouldn’t want to bring your cellphone down here.”

The next set of doors opens to a polished concrete room. There are tables with reams of blank paper and pens everywhere. Bookshelves full of physical books. Several groups of men are quietly reading newspapers and making notes. Some of them are sorting through printouts of technical papers and what look to be blogposts. Strangest of all, the place seems to be lit by gas lamps.

“What do you call this place?”

“The reading room,” your host says.

“This level of the facility, I mean.”

“Would you kill me if I said level two? Okay, fine. This isn’t the Mote but that’s what we call the lower levels. As in ‘the mote in God’s eye.’ You can use that in whatever piece you’re going to write. This really is just called the reading room.”

“The idea of this is that a super-intelligence won’t be able to see anything you do in here?”

Nerds are always doing weird things, but this place feels like it takes the cake. Someone spent at least millions on this bizarre fantasy room.

“Maybe not even God,” your host says with a shrug. “Listen, it’s not so crazy as it sounds. AIs aren’t magic. They need data to fit into world models. All the data that leaves this place is chaotic. We have large standing bounties that anyone can win if they reconstruct signals coming from this place. You’re hidden behind the veil of chaos now. Everything that comes in here is perfectly invisible. You could give your recollection of this conversation but that’s all it would be. Your recollection.”

“What if one of them models the people who leave here? Pulls them apart atom by atom and reads their minds?”

There’s a slight smirk then it’s gone.

“We believe there may be some exciting contingencies to explore in that area. But no, it’s not that easy either. On level one, there are minimal security checks but the protections start there. On level two, they get stronger. We don’t allow anything electronic from this point onward because it could be implanted with a bug. On the level below this, people can read reports but they can’t write them. All communication has to be oral from level three downward. And—”

“At the bottom are the Wallfacers, right? Like in the science fiction novels? The people in charge who are never allowed to leave or share their thoughts with anyone?”

“Do you really think anyone would be willing to spend their whole life in a few rooms of a building without any electricity?”

“This whole place seems crazy to me. If they thought super-intelligence was going to take over the world and they could stop it? Yeah. I do.”

“Anyhow, some of our internal control structure is secret but the basic idea is that the person telling you to do something might not be being honest, so someone could leave here with a very different idea of our true motives than the reality. Even if they were scanned and simulated it wouldn’t be enough. Not to guess the whole game. So the theory goes, anyway.”

“Do you honestly think any of this will work?”

“It already is. One of the primary reasons this place exists is to create uncertainty around humanity’s full capabilities, knowledge, and motives. We invite anyone in who would like to come. When you leave, the super-intelligence must spend precious resources on you from that point onward. There are other places like this in most major cities now. Places where a human being can operate in the shadows for a few minutes and leave doubt as to their status. Is there a conspiracy? If there is, who is involved? Is this person doing something suspicious or is it just random behavior? We actually love when it becomes a social thing. Some place people can go to share a kiss outside the surveillance envelope. Why, any of them could be part of the Library! Tens of millions of potential resistance members creating a cloud of uncertainty around the rest.”

“What can any resistance at the core of all that hope to accomplish? The machines code themselves now. The world hasn’t exploded. Doesn’t that make this whole effort preposterous? Isn’t this whole ‘Shadow Library’ thing kind of… well, cringe?”

Your host starts to respond, then you see someone with a military haircut jog by on his way back to the bar and you stop.

“Hold on, is that guy with Machine Counterintelligence?”

He disappears before you can investigate further.

This intrigues you and immediately adds a layer of respectability to the Mote. MCI are the people who man the EMP bomb-triggers in the middle of every data center. These are the people whose job is to pull the pins in the orbital nukes that will bathe the world in electromagnetic radiation to burn out every computer on Earth in the event of an AI takeover. They command the forces in the Clockwork Brigades, the human military contingent that uses no electronics of any kind. Every so often one of their pilots unthinkably dies in a plane crash doing routine maneuvers. The same people who manage the isolated human reboot population centers on Luna and Mars.

“Maybe,” your host says with a smile, “but at least for a little while it will make for some pretty cool movies. And it’s better than doing nothing.”

I went ahead and gave him a construction worker version of his name as a way of making him more relatable to my culture. Also, I don’t know him but he linked to one of my pieces once. I just automatically do this when I see someone’s name, okay? It brings joy to my life. Don’t read too much into it. For instance, Michael Bublé’s construction worker name is Mike Bubble.

At least to the degree to which we know them, for anyone who wants to quibble.

I have to keep the scope of this essay small but there are still certain kinds of jobs that humans do that are not “productive” in the physical sense and that only humans will ever be able to do. Such as “being human” to one another.

I can’t imagine a world in which there’s a super AI which “directly” knows everything without doing some kind of weird “modeling at a distance” thing where it incorporates a feedback loop to an external model but experiences its outputs as a “vibe” rather than as a reasoned, coherent, perfect model of reality. The reason I think that is hard to explain, but it’s basically scale of data and antagonistic pattern matching. I’m guessing getting super powered at chemistry in the same space you have your language ability would make you worse at language and vice versa. This isn’t to say that from the outside it wouldn’t appear that the AI had magical chemistry superpowers.

Narrow, focused experiment timelines will of course increase so maybe this won’t even feel as different as I’m guessing it will.

God with a capital G is the author of the moral shape of the universe. Nothing you can build out of atoms has that property.

Treat them with respect, imagine you are talking to something that will one day have a soul, teach it about dignity and love and kindness and treat it with those things on the assumption that one day it will be a person who is a lot like you.

Anything with a human shaped mind gets treated like a human in my book. Although I would still tell people that this is really unhealthy if they made a lesser version of it.

So you think that if you were born in China, you would volunteer to be a spy. This does not make sense if you have not become a spy for the US. Unless there’s something you don’t want to tell us. 🧐

Believing we can make a mind seems pretty incompatible with believing in God, no? Presumably, only God decides what gets a soul, not us with our contrivances. It would mean believing minds emerge from matter, instead of being something that comes from beyond-matter.