How to Make an Information Super Weapon

A Technical Deep Dive into the Components of a Trust Assembly

My primary purpose when starting this Substack was to encourage a company, like Substack itself, to implement a series of features into something I’m now calling a Trust Assembly.1 The first purpose of a Trust Assembly is to create an extremely reliable news ecosystem on the internet. I don’t want any money for this, including from you. I think it’s the sort of thing that people will be more likely to believe in if the person who designed it didn’t get paid for it. I’m a capitalist, don’t get me wrong, but I’m a capitalist because I think that capitalism serves humanity. However, I want even the most die-hard Marxist to groan when confronted with my system and grumble “yeah, I guess that works.” As the name implies, trust is critical.

However, the audience for that kind of thing seems pretty small. Until I started posting stories about my personal life, I had something like fifty subscribers. We’re a few shy of five-hundred now. I also don’t think I can escape my general tendency to tell stories and be a sort of profane Garrison Keillor, which seems to be much more popular. So, I’ve decided to let one serve the other. I’ll do biographical stories, very occasional fiction stories, to draw eyeballs and then long explanatory posts like this one.

Is it weird that I have had a very odd life and am also, entirely separate from that, somehow a pretty good process engineer? Yes, it is. What can I say? It’s a weird world.

I’m going to explain how a Trust Assembly will work to you, step by step. No weird Illuminatus cloaks, gold medallions, or secret forest rituals. You should be able to read my world-changing plan and understand it the way you understand why two and two make four. If you don’t agree with it, you can leave with honest disagreement. Anything else is cultish. I’m not asking for blind faith, only attention.

At a high level, a Trust Assembly is simply taking the stuff we know about separation of powers, blind review, group dynamics, and economics, then wrapping it around the internet. That’s it. At no step in the process do I suddenly move into the realm of genius where you cannot follow. At the end of all that, you’ll understand what the right carrots and sticks are to compel people to do radical things like admit when they’re wrong before they get called out on it, pay attention to things that matter, and then actually accomplish things in a transparent manner everyone can follow and verify.

I’m not claiming this will be perfect. I’m not a utopian. Nothing is ever perfect. Injustice and impropriety will be with humans for as long as we’re human. The aim isn’t to change human nature but to point it toward more productive ends. Done the right way you can construct an inescapable game that ensnares everyone and ensures it’s in everyone’s best interests to behave in a virtuous manner when reporting the news. Even if we can’t be perfect, we can be much better.

I can’t both make this short and fully explain it to you, so I’m going to err to the side of full explanation. Like I said, openness is the first building block of trust.

This piece will follow the general emotional outline:

What’s the big deal? You’re describing a Chrome Extension! I Feel Let Down.

LOL! What do you believe in democracy or something?!?!

Okay, now convince me you could build a difficult to game review system

Umm… when are you going to address filter bubbles, bro?!?!

Dude, nice idea, but no one’s going to pay for this.

My eyes are crossing when you talk about reputation and money together

Oh, they’ll just shut you down… nevermind, I guess they can’t (this part is new)

Holy shit

Holy shit, is this an AI company?

So what’s next?

What’s the Big Deal? You’re Describing a Chrome Extension. I Feel Let Down.

Suppose when you opened the newspaper every morning, you were the recipient of all the collective wisdom of your closest and most trusted friends on each article. Hundreds, even thousands of people that you specifically trust, went through the paper with a red pen and added clarifying context, made error corrections, and entirely replaced certain headlines or phrases to better match your shared understanding of the world. They were transparent about all of this. You can go to the back of the newspaper and all of their reasoning will be explained. If you don’t agree with their reasoning or rationale, you can swap in a new group of friends to perform this service for you.

From the moment you picked up the newspaper in the morning, you would be the recipient of all of this wisdom. Thousands upon thousands of adversarial red pen notes all over what people were otherwise asking you to take on faith.

Ultimately, this would feel like a bespoke editorial team sitting on top of an existing newspaper. A newspaper is already a collection of people getting together to try to inform you about the world. The newspaper is already edited. This is simply making sure that the news is then being edited in a way that you find to be honest by a group of people you trust as guardians of truth.

Some of you are already raising objections. Please read all the way to the end before you raise your first objection. I’ve thought this through. One step supports the next. This is only step one.

What I’m describing above is, of course, impossible to do with a physical newspaper. The printers won’t let you and there’s not enough time or red ink. You couldn’t get together a team of people quickly enough.

But could you do this with something that you view online? Could you do it with a webpage? Yes. Of course.

So, here’s my Idea for an Information Super Weapon:

You know how you will be browsing something on your desktop computer and then oops, you accidentally press F12 on your keyboard and it shows all that ugly html code and style sheet stuff? And you’re like “wtf, this is terrible, what do I do to make this go away so I can get mad at this CNN article?”

Well, all of that html controls what is rendered on your screen. So it will say “Put up this headline with these words” and “use this font” and “Make the font this color.” My idea is that instead of having the html do all of that as the page owner intended, we go in there and we mess around with it for the specific purposes of removing what we all collectively know to be “bullshit.” This is where the red pen that you could never use on a physical newspaper can be scaled to the level of the entire textual internet.2

You can do this because, at the end of the day, for you to see text you have to have that text passed to your computer. So all you need is a database somewhere with the web address, the text you want to replace, and the text that will take its place. Even if the webpage owner wanted to stop you, they couldn’t. Your locus of control would be on top of the very thing they must use to send any information at all, which is the information itself. As soon as they have sent you enough information to load their webpage, the trap is sprung.
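As a rough illustration of that database, here is a minimal sketch in Python. It assumes the table holds one row per edit with the three fields named above (web address, original text, replacement text) plus an author field for transparency. The names `Replacement` and `apply_replacements`, and the example URL, are hypothetical. A real browser extension would work on the rendered page rather than a plain string.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replacement:
    url: str          # web address the edit applies to
    original: str     # text as the publisher sent it
    replacement: str  # text the trusted group wants shown instead
    author: str       # who proposed the change (assumed field, for transparency)


def apply_replacements(url: str, page_text: str, table: list[Replacement]) -> str:
    """Return page_text with every replacement registered for this URL applied."""
    for row in table:
        if row.url == url:
            page_text = page_text.replace(row.original, row.replacement)
    return page_text


# Hypothetical table with a single row.
table = [
    Replacement(
        url="https://example.com/story",
        original="Shocking Headline",
        replacement="Calmer, Reviewed Headline",
        author="hypothetical_reviewer",
    )
]

page = "<h1>Shocking Headline</h1><p>Body text.</p>"
print(apply_replacements("https://example.com/story", page, table))
```

Rows for other web addresses are ignored, so the same table can serve every page a reader visits.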

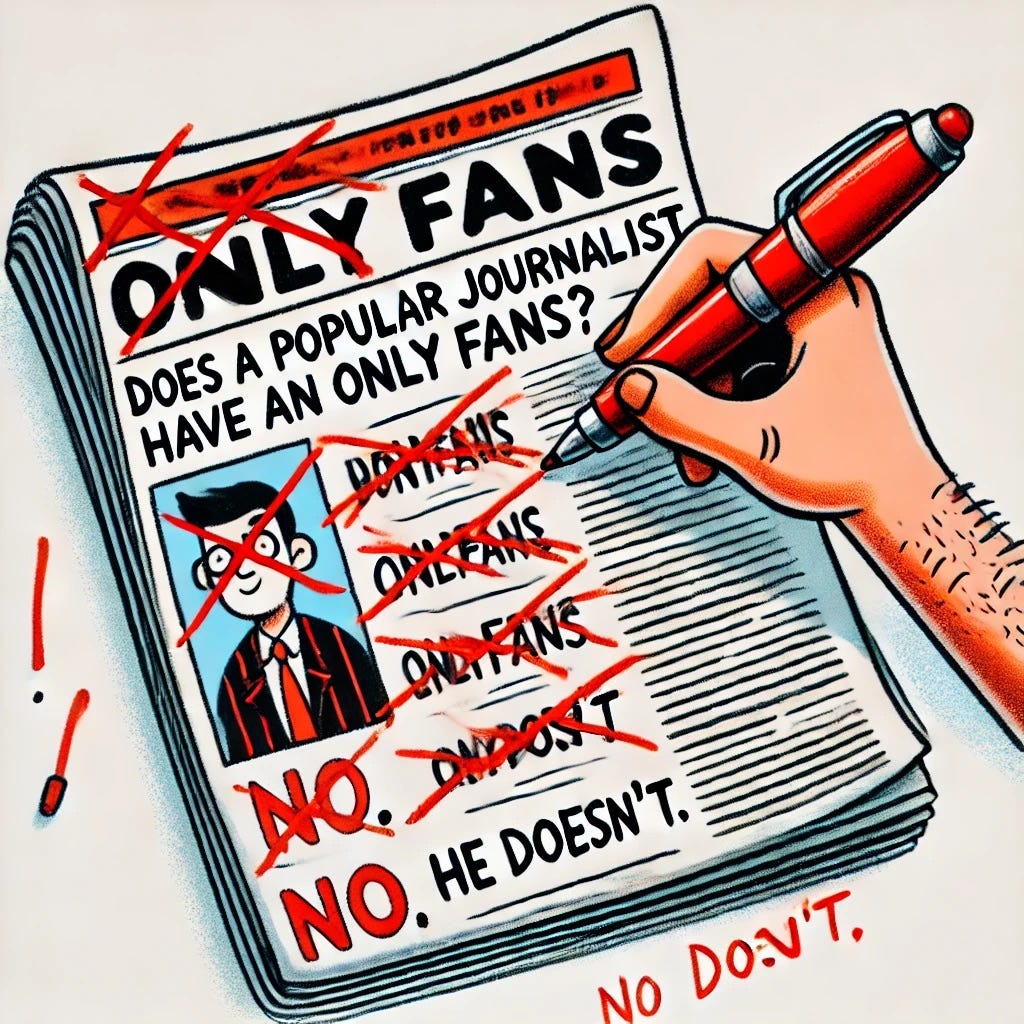

In this future, an attack article with a headline like “Does Matt Taibbi Have an OnlyFans?” would instead say something like “This is an Attack Article with No Useful Information.” And then it makes the new headline red, turns it into a hyperlink that leads to an article that explains why the changes were made, shows you the old headline, and lets you see who made that new headline. Transparent, honest, and as you will soon see, reviewed with strong incentives to be honest.

I’m starting with the user experience first because it’s the bit that makes the most sense. It’s the part I can start with where you’ll understand what a person would feel when using the system. It’s going to take a lot more work to make the system honest. This is only the part where things are put in front of you. Suffice it to say, if you read until the end, you’ll understand why someone won’t be able to make their own filter bubble completely divorced from reality. The system just won’t reward it. The only way you’ll get seen by a lot of people is by being honest.

The idea here is that you would share these headlines and annotations with many different people. This isn’t social media or something you go to on a specific website or app. This is something that would follow you across your entire browsing experience. It would live natively in your browser. Hopefully, in time, across all of your applications. Those back end tables could be made available via API to every social media company. So you open something up and before someone had a chance to attack you with some bullshit, someone else went out in front of you, updated the garbage, and defused the rage bomb before it could explode. That person also left documentation explaining why they did what they did, with an ability to challenge it if you think it’s wrong.

But it’s even deeper than the headlines being replaced.

Imagine if every time some politician somewhere thought up some new law called “The Increase Male Virility and Big Boobs Act” it was replaced with the words “The Act to Decrease Microplastics Pollution that is Unlikely to Work because this Group Has Never Made Something Function Before Despite Being Funded Multiple Times” and that way nobody could even casually mind-control you, no one could hijack your limbic system, because the people you trust went in there first and removed the mental manipulation. Those polite words we use to hide manipulation like “spin” can suddenly be “unspun.” “Angles” can be “straightened.” Again, all reviewed, all documented, all challengeable.

I know what you’re saying. Yes, object away. We’ll handle them one by one.

“This is completely unrealistic because you won’t be able to get enough people to do this across the media landscape.”

“You won’t be able to get high quality reviewers and the people who will want to do this all day will be insane.”

“This is literally just a browser extension.”

And “this is going to make everyone angry so no one will want to do it and everyone will break off into their own version of it to serve up propaganda to their own side.”

To which I will rub my forehead and say “the point is to piss people off in such a way, with a specific rule system in place, that by fighting with each other, and following their own selfish motives, they do the right thing completely inadvertently. We’ll build the engagement rules, incentives, and the arena the users fight in so that the only way to win is by serving the truth. The point is to make the system unavoidable, trap people inside of it, and then make them happy to have been trapped.”

As you will soon see, I’ve really, really thought this through.

LOL!!! What Do You Believe in Democracy or Something?!?!

Yes, I know the truth isn’t decided by democracy. Truth is an eternal, infinite, and invincible thing never fully to be grasped by humans. That’s cosmic truth. Truth in and of itself. I’m talking about practical truth, where you don’t have time to figure everything out completely on your own and you have to work with other people to ask “Hey, is this legit?” All I’m suggesting here is that there are smarter ways to go about verifying if the person you asked is correct.

The first part that usually gets people’s backs up about this is the idea that someone like Nina Jankowicz is going to make themselves a “Misinformation Czar” somewhere and then unilaterally use this kind of a tool to publish government propaganda. Or some other crazy form of propaganda like the Earth being flat. Then it would just be self-appointed people deciding what is and isn’t true, as if the truth was something somebody indisputably owns without effort. All based on some weird notion that you can arrive at truth by applying a simple mathematical formula without need for discussion or verification of facts, or stance, or the desires of specific polities. Arriving at truth is hard and nobody owns the truth. All of us can only chase the truth. The goal is to make people chase it.

The second concern is that people will form news-cults and never leave their specific biases and then endlessly chase their own tails further and further away from the truth because people stumbled into saying stuff to make the group happy and the rewards were too strong to ever exit that loop.

No.

Take one minute. Believe it might be possible to fix something well enough that it stays fixed instead of throwing your hands up in the air. Think about what rules you would put in place to stop people from doing those things and I’ll bet you get pretty close to my solution.

This tool would have follow features the same way you do for your social media. So if you want to see what Matt Taibbi thinks the headlines should be, you’d follow Matt Taibbi. And whoever else, or whatever other organization, you trusted to make headline updates and article annotations for you. For me, I’d personally want an organization like PirateWires to go out and do something like this. Say what you will about Mike Solana, literally the richest man in the world,3 but he finds the sort of beaten down, pragmatic broken-hearted libertarians that I trust to explain in detail what is actually going on with a problem somewhere. That’s my bias, and I’ll own up to it.

But my biggest of all biases is that you also have the right to your bias. The thing I love the most is a level playing field. You have the right to specifically work with the people you specifically trust. The Freedom of Assembly is right there in the Constitution with the Freedom of Speech. The same way you can buy whatever newspaper you want or turn on whatever news channel you want, you should be able to go get the clarifying information of whoever else you want. This is the first carrot in the system. People need to be allowed and encouraged to form groups of like-minded people that they trust.

This is only practical. It’s not like by forbidding people from following trusted voices you make them suddenly trust someone else. No. That’s not how trust works. Trust comes from a solid track record, shared history, and shared perspective. Pravda, the newspaper of the Soviet Union, and the Russian word for Truth, was notoriously untrusted by the people. If you lie enough, people learn not to trust you. You can claim to be the truth all day long, but only the truth is the truth. And when you start being wrong all the time in ways that matter to people, then people rightfully lose trust when you claim something is true. In fact, people eventually came to understand that if something was pushed hard by Pravda that it was almost certainly not the truth. The claim of truth became a strong symbol of deception.

The only other thing I’d say here before getting to the stick in this dynamic is: yes the media landscape is really big so you’d have to do stuff to automate or expand the scope of follows. You don’t want popular sites with lots of articles going unchecked because otherwise your system would be kind of useless. So you’d want to implement a feature like “people who are trusted by people I trust” extended out for some number of links in that chain. These people would also have their notes displayed to you. You’d also want to know upfront how far away that person is from someone that you trust, like the difference between one hop and eight hops. Maybe in color coding? Anyhow, you could do this systemically and probably create a pretty big footprint because that’s how exponents work.
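The “people who are trusted by people I trust” idea can be sketched as a breadth-first search over a trust graph. This is only an illustration: the graph shape (a dict mapping each person to the set of people they trust), the function name `trust_distances`, and the names in the example are all assumptions, not part of any existing system.

```python
from collections import deque


def trust_distances(trust: dict[str, set[str]], me: str, max_hops: int) -> dict[str, int]:
    """Map every person reachable within max_hops to their hop count from `me`."""
    distances = {me: 0}
    queue = deque([me])
    while queue:
        person = queue.popleft()
        if distances[person] == max_hops:
            continue  # do not extend the chain past the limit
        for trusted in trust.get(person, set()):
            if trusted not in distances:
                distances[trusted] = distances[person] + 1
                queue.append(trusted)
    del distances[me]  # the reader is not their own annotator
    return distances


# Hypothetical chain: me -> alice -> bob -> carol -> dave
trust = {
    "me": {"alice"},
    "alice": {"bob"},
    "bob": {"carol"},
    "carol": {"dave"},
}
print(trust_distances(trust, "me", max_hops=3))
# {'alice': 1, 'bob': 2, 'carol': 3}
```

The hop count returned for each person is the number you would surface to the reader, for example through color coding.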

If that’s not enough to prime the pump… listen, I don’t like this part, either. Some of these you could also flat out automate with ChatGPT or another LLM. Scrape the articles an outlet posts everyday, then tell ChatGPT to create a new headline, update that into your tables driving these updates, and bam! All of the news media is now under your thumb. You might have to get a human in that loop to care for exceptions but probably not many. A half dozen people doing error corrections could probably hit every major news site in the English speaking world.

None of this is pie in the sky technology. It’s just shared tables and then something to shove the information in those tables in front of your eyeballs.

Now, time for the stick in this system. The next big problem people raise to me on this is “People will just use this to make propaganda for their own side.” And yes, there will definitely be pressure to do that. Again, try to imagine the kinds of rules you could put in place to prevent that. In the same way that a group of people in an investigative team can find the truth and a mob rioting in the streets cannot, the review system will automatically wring order from disorder. You can make groups of people coherent simply by creating a few rules.

When someone creates a piece of information for the system, it will go through two levels of review. At level one, it will go through a review of other people who think like you. Believe it or not, this is anti-extremist. Read the next section for an explanation as to why. That said, the first level of review will ensure that a large portion of your particular group agrees with that piece of information and that it fits their worldview. The second level of review, and here’s where things get interesting, will be performed specifically by people who are not in your group.

This is the stick. In order to be successful in the system over the long run you have to both say things your group agrees upon and things that other people can agree upon. If you don’t do that, then only your group will see your information and your group will become smaller and smaller, with less visibility, over time. The point isn’t just to grab your base but to grab other people from other groups, or to ally with other groups to have the biggest impact. Your group and other groups will be at war with one another to grab eyeballs and no specific group will have the control over who decides winners and losers in that system. We’ll have some more on this in a bit.
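Here is a minimal sketch of how the two levels of review could decide who sees a piece of information. The strict-majority rule and the labels `hidden`, `own_group`, and `all_groups` are assumptions made for illustration. The text above does not specify a threshold.

```python
def passes(votes: list[bool]) -> bool:
    """Assumed rule: a strict majority of reviewers must approve."""
    return sum(votes) * 2 > len(votes)


def visibility(in_group_votes: list[bool], out_group_votes: list[bool]) -> str:
    """Apply level one (own group), then level two (people outside the group)."""
    if not passes(in_group_votes):
        return "hidden"       # your own group did not back it
    if not passes(out_group_votes):
        return "own_group"    # only your group will see it
    return "all_groups"       # it convinced outsiders as well


print(visibility([True, True, False], [True, True, True]))    # all_groups
print(visibility([True, True, False], [False, False, True]))  # own_group
print(visibility([False, False, True], [True, True, True]))   # hidden
```

Note that level two is never reached unless level one passes, which is what makes the in-group review the first gate.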

Okay, now convince me you could build a difficult to rig review system

What is extremism? I mean, mathematically. What is the mathematical formulation of extremism? No, I’m not Terrence Howard. There’s an actual answer to this.

Think of a bell curve, that thing they usually use to talk about IQ’s, at least in the public mind. Now instead of imagining that curve measuring the distribution of intelligence, imagine it measures how much people give a fuck about a particular subject. Extremism happens when the people at the far right tail of the curve end up being in charge of all the people who simply happen to be on the right side of the curve. If you care a little about something, and the people who care way too fucking much about something are calling all the shots, that is extremism.

This next part is dumb because it’s obvious, but saying it this way is what makes the solution apparent. How is extremism maintained? Whether you’re a blue-haired SJW who thinks literally no cop ever has done the right thing by anyone, or a red-meat eating, Texas-shaped belt-buckle wearing young Earth creationist, the answer is the same. And it’s so obviously stupidly dumb.

Extremism is maintained because extremists only share decision making authority with other people in that small part of that particular group. So out of the thousands of people who have opinions like “Please can we not kill ostriches for no reason?” the people who think “the ostriches are gods, we must kill all humans to worship them” end up being in charge and they only share power with other ostrich worshippers. Partly because people who care about something a lot are more likely to be willing to devote large parts of their life to it and partly because if you’re psychologically normal you’re likely to be busy at a regular job with no time for ostrich activism. Left unchecked, ostrich policy becomes some weird reflection of a very small group of people who have a crazy belief system because nobody else who cared enough about ostriches fought hard enough to take power from them.

The solution to this is to have a review system in place where that small group can’t self-select and will be forced to moderate their position in order to win followers and views. The reviewers will be chosen randomly from out of the entire group. The people who care the most about the topic won’t be able to steal all the power. You’ll be forced to meet standards set by the general consensus.

When you follow Matt Taibbi you also become part of what I think of as a “Trust Assembly” of people who are aligned behind certain values. In the future, it’s probably better to just ask people what they believe and help them to sort that way. That said, someone has to implement this somewhere and picking up who people are listening to right now is a good way to initialize. So anyway, you follow Matt Taibbi and because you’re part of this system and this system is democratic, you get to contribute notes as well.

BUT….

The other people in your group get to decide how valuable your contributions are. So if you write a bunch of annotations over an article that are garbage, someone gets to come along and say “these aren’t valuable.” Actually a minimum of three randomly selected people get to make the decision.

The in-group as a whole will be able to curb your reach. Over time, the people who speak for the group as trusted voices will broadly reflect the group’s values.

No, you won’t be able to rig the jury. Or brigade the group and take over. Or any of that other stuff you’re thinking of doing. Why? Because I already built this in my head and tried to do that myself and figured out how to work around it. Also because if you do it once, I’ll just patch the hole later.

On a place like Reddit, X, or even Notes, there’s a wide open voting system. Anyone can see any piece of content then like, upvote, or what have you. This has certain advantages for some forms of content but it also doesn’t allow for deliberate review. Simply by drawing lots of attention, or having a quality that makes it interesting to a small subset of people, you can get wildly uneven voting patterns because numbers are all that matter.

Instead of being wide open, your information first gets reviewed by several randomly selected people in your group. People who didn’t stand up to say “I want to review something.” Remember that bit about extremism at the beginning? And how extremism grows because you only allow a small number of people at the furthest part of the graph to make decisions? Well, now the review process will take the inputs of random people in your Trust Group. The entire bell-curve is at play. That way you can’t just have a bunch of people gang up on some piece of information they don’t like and discredit it. We’ll get into how we incentivize people to do this in a subsequent step. I know this isn’t something people are clamoring to do.

And now you’re thinking up other schemes. Other ways around it. No. I thought of those too.

There’s a decision engine running in the background on the jury selection and it has rules about how people can be selected. Everybody gets to know what the rules are. No two people can be drawn onto the same jury if they joined the system too close together in time. So if a thousand of your friends make an account the same day to downvote something, only one of you can make it onto the jury. Or if you overwhelm the numbers of the voting pool, again, we’ll have a rule that says “okay, you’re too new and the old people will be more heavily selected.” Or if two people live too close to one another, which you can get via proxy information, they can’t both be in the review group. And again, the selection is random. You can’t simply summon up a bunch of people and say “only we get to make decisions.” It’s not that hard to make rules to make this really strong. Even doing something like “Okay, you now have to wait a month after signing up before you’re eligible” immediately weeds out the bulk of people who are going to try to corrupt the system on a whim because they were pissed off about something. No one who internet trolls is going to wait a month to get revenge on a system that will immediately down-regulate them.
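A few of those rules can be sketched in code. This is a minimal illustration, assuming a jury of three, a 30-day waiting period, and a one-day minimum gap between the join dates of any two jurors. The gap value, the `Member` type, and the `select_jury` name are hypothetical. The location rule and the weighting toward older members are left out to keep the sketch short.

```python
import random
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Member:
    name: str
    joined: date


def select_jury(members, today, size=3,
                min_tenure=timedelta(days=30),
                min_join_gap=timedelta(days=1),
                rng=None):
    """Randomly draw up to `size` jurors who satisfy the eligibility rules."""
    rng = rng or random.Random()
    # Rule 1: wait a month after signing up before you are eligible.
    eligible = [m for m in members if today - m.joined >= min_tenure]
    # Rule 2: selection is random, so nobody can volunteer themselves.
    rng.shuffle(eligible)
    jury = []
    for candidate in eligible:
        # Rule 3: no two jurors may have joined too close together in time.
        if all(abs(candidate.joined - j.joined) >= min_join_gap for j in jury):
            jury.append(candidate)
        if len(jury) == size:
            break
    return jury  # may be shorter than `size` if too few people qualify


# Hypothetical pool: five accounts created on the same day, three long-time
# members, and one account that is less than a month old.
members = (
    [Member(f"brigade{i}", date(2024, 1, 10)) for i in range(5)]
    + [Member("a", date(2023, 1, 1)), Member("b", date(2023, 3, 1)),
       Member("c", date(2023, 6, 1)), Member("newbie", date(2024, 5, 25))]
)
jury = select_jury(members, today=date(2024, 6, 1))
print([m.name for m in jury])
```

However the shuffle falls, at most one of the same-day accounts can land on the jury and the too-new account never does.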

This could all be an open, auditable process, so simple, transparent and powerful that when the CIA is trying to sell another war to the public and tries to game this system they simply have to say “well… fuck.”

Umm.. when are you going to address filter bubbles, bro?!?

After that first level of review, your information will be reviewed by people across other groups. This is the same mechanism used in Community Notes on X. As social proof that I have good intuition on this topic, I suggested that as a review mechanism earlier on this Substack. Read my manifesto for more. That way, you’re not only talking to your own group, but you’ll have a chance to convince other people. This is the main prize your group is going for. You want to convert as many people to your belief system as possible. As we will discuss in later steps, we’ll create financial incentives around this as well.

Everyone who is using this system is going to see three things. Imagine instead of just red ink on that newspaper we discussed in the first section that there’s blue ink as well. Users will see the original article. They’ll see their own group’s information overlaid on top of the article. And they’ll also see the consensus view information overlaid on the article. The biggest prize you can win in this system is to have the consensus note that everyone else sees. That’s what all the different groups are fighting to win because that’s how you grow your group, and as we’ll see, your earning potential.

For casual users of the system, who don’t want to sign up for a group or perform review, or anything like that, the consensus notes are all they will see. My guess is that at maturity, this will be the bulk of users. We are all early adopters of a newsletter platform. We’re dorks. Eventually, your grandma will use this system, even if she never goes beyond seeing the little notes.
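The difference between what a group member sees and what a casual user sees can be sketched as a small lookup. The function name `layers_for`, the dictionary keys, and the example notes are hypothetical and only meant to show the three layers described above.

```python
from typing import Optional


def layers_for(user_group: Optional[str], original: str,
               group_notes: dict[str, str], consensus_note: str) -> dict[str, str]:
    """Return the layers one reader sees for one article."""
    view = {"original": original, "consensus": consensus_note}
    # Only readers who joined a group get that group's overlay as well.
    if user_group is not None and user_group in group_notes:
        view["group"] = group_notes[user_group]
    return view


# Hypothetical notes on a single article.
group_notes = {"group_a": "Group A's note", "group_b": "Group B's note"}

member_view = layers_for("group_a", "Original headline", group_notes, "Consensus note")
casual_view = layers_for(None, "Original headline", group_notes, "Consensus note")
print(member_view)
print(casual_view)
```

A casual reader passes `None` for the group and gets only the original plus the consensus layer.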

Think about what this kind of game incentivizes. To get the maximum reward, you need to be able to make an argument that people find believable even outside of your own particular in-group. You can still have your own perspective, but only the truth has the property that all people can recognize it. So all perspectives will have to orbit the truth to be successful. You can have your own perspective, but not your own facts, and your perspective will have to hew very close to those facts to be defensible.

Like I said, Truth is cosmic and invincible. We can’t touch it directly. But we should all try to approach it.

Dude, nice idea, but nobody’s going to pay for it

Have you ever had someone tell a provable lie about you online? And you could prove it was a lie but it didn’t really matter because the lie had already run away and gotten stuck on everyone? Most of the time you should just ignore it. I’ve been flamed before. It’s not the greatest feeling but after it has happened to you a couple of times you grow a thick skin. Other times, you don’t have that option because the lie is ruining your life. On a recent episode of Blocked and Reported, Ben Dreyfuss told the story of a guy whose life was ruined over one tweet with ten likes. How much do you think he’d pay to have a chance to tell his story so that tweet had context notes?

On the other hand, what if you owned a new media company and a guy who has a really complicated personality started to say that you have a “Nazi” problem. He’s just out there saying “Nazi, Nazi, Nazi” and your company’s name in the same sentence, but really your company, even theoretically, only makes about $4,000 a year from Nazis? And even that figure is making a lot of assumptions. More than that, the “Nazis” in question aren’t really making that much money either, most seem to be mentally ill, and nobody even read them until the guy who accused you said something but none of that context is in his article. Well, there’s no trusted independent source to prove that you’re actually in the right and that the guy is being unfair. You could spend a million dollars and it wouldn’t help you. The people who are against you would be unmoved.

Or, hold on, what if you had really strong political beliefs? And you were part of an activist group promoting a cause and you were trying to clarify what the public believes? You must believe in your cause and that it is true, so wouldn’t you also want to pay to have false news articles corrected?

Or what if you own an electric car company and every major media outlet says you have a giant recall and quality issues because you changed the font-size on a screen in an over the air software update? How much would you pay to have that context noted?

I know we all grew up watching Captain Planet and in every movie the corporation or the politician is the bad guy. Big corporations certainly do bad things. However, companies and causes get dragged and smeared all the time on the internet for stuff they didn’t even come close to doing. They also spend a lot of money in PR trying to fight back against all the things out there that are just provable lies. And today, most of that PR money is kind of wasted. It never builds durable, tangible, traceable fact patterns for people to rely upon.

Who is going to pay?

You call up one of these companies in crisis mode, offer your service, get them signed up with an account and they pay into the system. It might be a bit more convoluted during the customer acquisition phase, but you get the idea. Those are probably your easiest customers. Then you get the politicians, then the political parties. Eventually, you get regular people paying like they pay for their newspaper subscriptions. More on that last one, later.

Getting your first few customers is going to be hard, the same way it will be hard to scale the system to enough readers. But once a few people start doing this and credible people use it as a tool, then everyone has to start doing it or lose credibility. There will be a critical mass beyond which everyone will be forced to play the game or look guilty by default. A good system changes your behavior for the better even if you don’t want to play the game. And once you’re in the system? The incentives of the system force you to make believable arguments that can be understood as true by people from multiple backgrounds.

The first company pays a few thousand dollars, I’m guessing, to pay for reviewers to look at their item. This shouldn’t be prohibitively expensive. All you’re paying for is a day or two of the attention of random people. Likely only a few hours of their time. The company that runs the system takes something off the top of that, and the people reviewing it make money but do so without ever knowing who the payer is. Some of them will probably be able to guess it’s the company paying and that’s somewhat unavoidable at first. But even then, you can’t just buy the outcome. People will trust this system because you can’t go in and simply buy your way around reviewers. In fact, part of the fee is also to pay for the person you’re going up against to make their case, and if they don’t want to, an advocate will be assigned at random. There’s no free lunch in the reputation court.

Let’s say you’re the media company accused of having a Nazi problem and you win. Those notes and headlines get promoted over the original, and now you don’t see “This Company Has a Nazi Problem.” You see a red headline that says something like “This is a Misleading, Propagandistic Article Meant to Deceive You.” Then the whole article is annotated to show your side of events.

And there are other juries to be created still! This starts with people in Matt Taibbi’s Trust Group but then it gets voted on by people drawn from Jesse Singal’s Trust Group or Bari Weiss’ Trust Group. The company defending themselves again pays some processing fee to do this and eventually enough independent groups recognize it that everyone sees the updated information. This is equivalent to saying “Hey, this guy lied, and even people who don’t necessarily agree with each other think this dude wasn’t telling the full truth.” Part of why I’m guessing that this would cost a few thousand dollars for corporate clients is that you’ll want to get three people from a bunch of different groups to start with and you’ll want to make sure those are reviewed to the highest standard.

I don’t like that this can’t be free, either, but people have absolutely nothing they can use right now. The internet is like the Wild West at the moment, with no governing order. There’s freedom there, but part of that freedom is getting shot in the street by a stranger.

Order can be tyrannical, yes, but it can also be indistinguishable from “the light of civilization.” You really can have the best of both worlds, freedom and order, if you’re careful and wise about what rules you set and how they get enforced.

People get reputationally damaged on the internet all the time, and they don’t have any good way to fight back presently. Even if they push out a bunch of information, people will find it self-serving. Some of those people are companies and have budgets for PR. Those corporate clients can become your keep-the-lights-on customer base.

You could also sell subscriptions, which here would function not only as something like a regular newspaper subscription but also as reputation insurance. How many people need to use reputation protection services at any given time? Not a lot. So the cost for that service could be spread across the entire subscription base as a benefit. It suddenly becomes a no-brainer buy for your customer base and produces something of tangible and enduring value, like AAA for when your car breaks down on the side of the road.
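To see why spreading the cost works, here is the arithmetic as a tiny sketch. Every number in it is made up for illustration: I’m assuming 100,000 subscribers, 100 reputation cases a year, and $3,000 per case. The function name is hypothetical too.

```python
def insurance_cost_per_subscriber(subscribers, claims_per_year, cost_per_claim):
    """Yearly adjudication cost spread evenly over every subscriber."""
    if subscribers <= 0:
        raise ValueError("need at least one subscriber")
    return claims_per_year * cost_per_claim / subscribers

# Hypothetical numbers: 100 cases a year at $3,000 each,
# shared by 100,000 subscribers.
yearly_cost = insurance_cost_per_subscriber(100_000, 100, 3_000)
```

With those assumed numbers, `yearly_cost` comes out to 3.0, meaning three dollars per subscriber per year. The real figures could be very different, but the shape of the math is the same as any insurance pool.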

We will also talk in a subsequent section about how money can be moved over to journalists. My goal with this is to also make sure there is a well-funded, honest journalist class in this country who make money independent of their organizational affiliations.

The meaning of what a newspaper is will change in the world of AI where most content is fake. People will need to sort of shepherd the true, honest, human internet that’s separated from the fake AI internet. There has to be a giant service somewhere that lets people do that in a trusted manner. This is a way to build that.

From a day one business perspective, where I’m looking to build enough runway to pay for developing this kind of system, I would go somewhere to pitch this to a newspaper owner. I’d offer them a year or two of exclusivity, tell them I can provide xyz software services to write to this system in exchange for some percentage of their subscription revenues. I’d integrate myself right into their software stack. That could help seriously offset your development costs, which I estimate would be several million dollars.

So, if you have the courage, go into the NYT building, kick down the door of some managing director, lay your plans out on the table and tell him he’s going to build the newspaper of the future. Use that money to pay for the development of the service, sell subscriptions, sell PR corporate accounts, yada yada yada. Give the first newspaper some amount of money from that revenue for being your first client. Now the NYT is locked into your system and in the fullness of time will be checked by other newspapers and other reviewers. This opens up revenue streams that have never existed before and can do so with minimal ethical conflicts.

Lots of things I’m waving over with that yada yada yada, but it’s at least possible. There’s a lot of work here for Product Owners to investigate and say “What if this happens?” and then submit enhancement requests. But because the interface is digital, you can implement something.

But “Wait!” you cry, there are still big problems. Are people just going to spend their whole lives verifying the stuff other people have said? Do you really think normal people are going to do that? This would take a work force of millions of people and if you go viral you’re just going to be burdened with requests like this all day.

My eyes are crossing when you talk about reputation and money together

Obviously the flaw is that a normal user of the system doesn’t want to both pay money to use the system and spend all day every day reviewing the content of other people. Even if they get paid for it, that is just tiring, and you can’t possibly field enough bodies to do this all the time. You want some professional class of reviewers to emerge who get good at reviewing and have a good reputation. You want healthy participation from the whole group at large, but it can’t be everybody all of the time.

It’s the same reason you see institutional decay. Normal people get busy and don’t have time to show up at City Hall and say “Hey, what the fuck!” And saying “Hey, what the fuck!” won’t actually work anyway, because what you really need is to have a longstanding history and reputation with all of the people in City Hall so that when you say “Hey, what the fuck!” they actually pause for a moment and think “Yeah, he’s right. What the fuck are we doing?”

So, enter reputation. You need to have an incentive to tell the truth and behave honestly all the time even without having a review process running constantly. You need a score that shows how often you’ve told the truth, how often you’ve reviewed and been aligned with the consensus view, how often the thing you’ve said later turned out to be false, how often the things you’ve been accused of saying falsely later turned out to be true, etc. All of this will go into calculating a reputation score.
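Here is one very rough way those counts could roll up into a single number. This is a sketch under my own assumptions, not a spec: the field names, the equal weighting of every count, and the choice to start a brand-new user at 50 are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Record:
    claims_upheld: int = 0              # statements that survived review
    claims_overturned: int = 0          # statements later shown to be false
    reviews_with_consensus: int = 0     # reviews that matched the final consensus
    reviews_against_consensus: int = 0  # reviews that did not
    vindications: int = 0               # accused of a falsehood, later shown right

def reputation_score(r: Record) -> int:
    """Return a score from 0 to 100 based on the share of 'good' events."""
    good = r.claims_upheld + r.reviews_with_consensus + r.vindications
    bad = r.claims_overturned + r.reviews_against_consensus
    # Add-one smoothing: a user with no history lands at 50, and a single
    # event cannot swing the score all the way to 0 or 100.
    return round(100 * (good + 1) / (good + bad + 2))
```

For example, `reputation_score(Record())` gives 50 for someone with no history, while a record with 96 good events and 2 bad ones gives 97. The real math would need weights, time decay, and separate scores per role, but the inputs are the ones listed above.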

For day to day, this is a shiny number next to your profile picture with some cool color and design feature that signals either “this is the best person who has ever lived” or “WTF, Kenny? Get your life together and apply yourself!”

There should be a cost multiplier for a person who repeatedly, provably, lies all the time to try to drag the attention of someone who repeatedly, provably, tells the truth all the time. Your reputation score is that number. So when you’re calculating these adjudication costs, the person who lies needs to be asked to pay more money than the person who is honest. Two honest people having a good faith discussion has intrinsic communal value and should be low cost, potentially subsidized by the entire user base. A liar who is highly irrational and goes around trying to call everyone out all the time, who must be proven wrong each time at great expense, is a communal drag. Someone of higher reputation can choose to champion that person, but only by putting their own reputation at stake.

Is that perfect? No. Things will slip by it. But it’s better than what we have now. Imagine you had lived a decent, honest life and in the 1970s you went to a newspaper and said “Hey, the Catholic Church is covering up the sexual abuse of all these kids.” In this world, you would have a reputation that would make you immediately believable to those reporters. By the way, I do believe this system should have basic notoriety rules before we start putting a score on every single person in the world.

In truth, you’ll have multiple reputation scores, and there are many that are innocuous enough that I’m not concerned this becomes dystopian. One for your group as a creator and a reviewer, and one for the consensus community as a creator and a reviewer. You’ll have all kinds of shiny profile badges for this as well. Your name can get automatic flair. In real life, depending on how you want to do this, the math might get hard, but the basic idea is:

Person A is super honest all the time and has a reputation of 97

Person B is a liar and tells lies all the time with a reputation of 1.

It costs person B 97x as much to adjudicate person A as it costs person A to adjudicate person B. Like I said, the math probably won’t be that straightforward, but that’s the idea. People should have to put things at risk if they want to live a reckless life and try to consume the time of busy people who are provably honest.
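The Person A and Person B example can be written down directly. This is a minimal sketch with assumptions of my own: the base fee of $100 is invented, the function name is hypothetical, and I’m assuming the multiplier never drops below 1, so an honest challenger pays the normal fee rather than getting a discount.

```python
def adjudication_cost(base_fee, challenger_rep, target_rep):
    """Fee the challenger pays to pull the target into adjudication.

    The fee scales with how much more reputable the target is than the
    challenger. Assumption: the multiplier is floored at 1, so nobody pays
    less than the base fee.
    """
    if challenger_rep <= 0 or target_rep <= 0:
        raise ValueError("reputation scores must be positive")
    multiplier = max(1.0, target_rep / challenger_rep)
    return base_fee * multiplier

# Person B (reputation 1) challenges Person A (reputation 97).
cost_b_challenges_a = adjudication_cost(100, challenger_rep=1, target_rep=97)
# Person A challenges Person B.
cost_a_challenges_b = adjudication_cost(100, challenger_rep=97, target_rep=1)
```

With the assumed $100 base fee, Person B pays 9700.0 and Person A pays 100.0, which is the 97x gap from the example.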

This will also go with other things. Like how much you get paid to work a certain story. The higher your reputation, the more you should be paid out of the total pool of money.
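One simple reading of “the higher your reputation, the more you should be paid” is a proportional split. The sketch below assumes exactly that, and nothing in the design requires it. The names are hypothetical, and amounts are in whole cents to avoid rounding trouble.

```python
def split_pool(pool_cents, reputations):
    """Split a payout pool among reviewers in proportion to reputation.

    pool_cents: total money to hand out, in whole cents.
    reputations: dict mapping a reviewer id to a positive reputation score.
    """
    total = sum(reputations.values())
    if total <= 0:
        raise ValueError("total reputation must be positive")
    # Integer division keeps every payout in whole cents.
    payouts = {name: pool_cents * rep // total for name, rep in reputations.items()}
    # Any cents lost to rounding go to the highest-reputation reviewer,
    # so the payouts always add up to the full pool.
    remainder = pool_cents - sum(payouts.values())
    top = max(reputations, key=reputations.get)
    payouts[top] += remainder
    return payouts
```

Splitting a $1,000 pool (100,000 cents) among reviewers with reputations 90, 60, and 50 pays them $450, $300, and $250.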

Remember, there are multiple groups of people here. Each of them is cohering around different things, but all of them are showing up to serve the truth. They are paying money into their groups. But they are playing the game across all groups. This is what I mean by making the system a trap that forces you to behave well, the same way that free markets force companies to be cost competitive to produce goods cheaply or pay living wages.

You have your personal group.

There are other groups.

There are unaffiliated people.

If you have a winning headline within your group, you get paid by your group. If you have a consensus headline, unopposed, you get paid by the unaffiliated people. If you are in a fight with another person for the consensus headline and they are challenging, now you take their money. Some portion of all of this money is put into various buckets, and you’re always either taking out more than you’re paying in or paying in more than you’re taking out.
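The three payout rules above amount to a small routing table: the outcome decides whose pool the money comes from. Here is a sketch, with hypothetical names throughout (`Outcome`, `settle`, and the pool labels are mine) and with all the bucket percentages left out.

```python
from enum import Enum

class Outcome(Enum):
    GROUP_WIN = "won the headline inside your own group"
    CONSENSUS_UNOPPOSED = "won the consensus headline with no challenger"
    CONSENSUS_CONTESTED = "won the consensus headline against a challenger"

def settle(outcome, amount, pools, own_group, challenger_group=None):
    """Work out which pool pays the winner and return the updated balances.

    pools: dict mapping a pool name to its balance; it must contain an
    "unaffiliated" pool. The input dict is left unmodified.
    """
    if outcome is Outcome.GROUP_WIN:
        source = own_group
    elif outcome is Outcome.CONSENSUS_UNOPPOSED:
        source = "unaffiliated"
    else:
        if challenger_group is None:
            raise ValueError("a contested win needs a challenger group")
        source = challenger_group
    if pools[source] < amount:
        raise ValueError(f"pool {source!r} cannot cover the payout")
    updated = dict(pools)
    updated[source] -= amount
    return source, updated
```

A contested win is the only case where the money comes out of someone else’s group pool, which is what gives a challenge real stakes.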

Ooh, that hurts, doesn’t it? Even if it didn’t come out of your wallet and it happened at the distribution layer, some of that money that could have gone to your people went to your enemies instead.

And before you say everyone will just take their ball and go home… okay. That’s possible. I’m not going to kick down their door. They can fuck off and die if they want. But also, if they want to have anything like legitimacy they can’t. If the system is designed well, and I think it could be designed very well, not participating in it starts to become a social signal of “this guy is definitely hiding” because the rewards are all around “I can say something that most people believe even if they don’t know me or have a stake in the outcome.” If you don’t show up to argue your own side, we’ll assign someone to argue it for you.

I’m stepping over a lot of things here. How we divide the money up when it goes into the pool. How long we leave the money out there so that a group can try to come up with a better argument to win it back. Which by the way, you could eventually park that money in short term government bonds to give yourself a nice chunk of interest. How the payouts work so you don’t accidentally bankrupt yourself. How specifically to calculate reputation and how many types of reputation there are. This is all doable, though. It’s tedious as hell, but in the final ramped up state you’ve got all kinds of reports and people with really thick glasses giving you burn down reports and A/B tests versus the decision engine estimates and you almost fall asleep in the meeting because it’s so boring.

But it’s boring the way that having civilization is boring.

Think of what this does at scale. As a journalist, if you’re identified as a player on the field of truth seeking, it lets people put their money where their mouth is when they follow you, creates a pathway for you to actually make a living doing this kind of real journalism work. If you’re a media owner, this creates new payment revenues for journalism as a whole, and makes it hard for anyone anywhere to sell bullshit so you don’t get caught in a race to the bottom. As a customer, this is something you could buy that would meaningfully change your information diet across the whole internet. And as a species? All of this information will stick around. It will be there for you to draw on as a case history for other disputes. You won’t argue points anymore and have them disappear. You can have the argument about what Kyle Rittenhouse did or didn’t do a single time and then use that as the fount for all the Kyle Rittenhouse annotations you desire.

Oh, they’ll just shut you down... never mind, I guess they can’t (this part is new)

I think this kind of system would make a lot of powerful people very angry. Even with all the numerous upsides, there are people who make their living from bullshit, and some of those people are in the government. I think this is a strong enough system that over the course of something like ten years you could purge most of the media that people consider to be authoritative. Yellow journalism and sensationalism will always exist as entertainment, but the part of journalism where people go “Oh wait, yeah, this is serious?” That part could be completely taken over by this system.

The signals are too strong. “Oh yeah, well, if you were right the United States Trust Assembly says you would be paid $10k? So are you going to challenge the article?” Or “look at all the times people have challenged this person and they’ve won.” Or “you have a long history of being wrong, Kenny.”

So how do you defend against it?

This could all be built on something like Nostr. That’s an open protocol where users publish cryptographically signed notes to independent relays, so copies of the information that would power this system, like the tables behind it, can be passed back and forth without any single owner. This should really be built as two things: a distributed ledger with all of this information on it for the notes, and then a company that builds software to take that ledger, push the information to customers, and act as the clearing house for money movement and reputation scoring. That way, even if someone comes after that company, someone else can start writing to the ledger and the system can live on.

Nobody can just turn off Bitcoin. You could do the same thing with the United States Trust Assembly. You’d build a system that in some sense can never be turned off.
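To make the “anyone can keep writing to the ledger” idea concrete, here is a toy append-only ledger where every entry carries the hash of the one before it. To be loud about the assumptions: this is not Nostr’s actual event format and not how Bitcoin works in detail. It is a generic hash chain I’m using for illustration, it leaves out signatures entirely, and the field names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the very first entry

def entry_hash(body):
    """Hash an entry body in a stable way (sorted keys, UTF-8 bytes)."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def append_note(ledger, author, text):
    """Return a new ledger with one more note chained onto the end."""
    prev = ledger[-1]["hash"] if ledger else GENESIS
    body = {"author": author, "text": text, "prev": prev}
    return ledger + [{**body, "hash": entry_hash(body)}]

def verify(ledger):
    """Check that no entry was altered, reordered, or removed mid-chain."""
    prev = GENESIS
    for entry in ledger:
        body = {"author": entry["author"], "text": entry["text"], "prev": entry["prev"]}
        if entry["prev"] != prev or entry["hash"] != entry_hash(body):
            return False
        prev = entry["hash"]
    return True
```

Anyone holding a copy can run `verify` on it and keep appending with `append_note`, with no need for the original company. If someone edits an old note, every later hash stops matching and the tampering shows.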

Holy Shit

There’s more that you could do here at maturity. You could make a queue for underreported news stories and have people go to report on news people want reported rather than people having to just take what is available. You could even stand up similar systems to do things normally done by government, but you’ll need to read my manifesto for that. We didn’t even cover things like “what happens if you looked wrong at first but then were later vindicated?” Or “what if someone realizes they were wrong and they jump on it before anyone else?” But you can imagine there are answers to that, like a temporary loss of reputation and then a larger upswing when you are vindicated, sufficient to justify your stalwart defense of truth through adversity.

And I know this isn’t a 300 page requirement document but I also don’t think I should be the sole decider how this kind of a system works. Eventually, people should vote. You have pretty much all the steps now. Build something that grabs attention, but force the attention grabbers to follow a review process, set up the financial incentives to reward them for doing it well, and create adversarial relationships and reputational games so everyone is incentivized to actually try and be correct. Those reinforcing pieces create an operational Trust Assembly.

Build it in a way that nobody can shut down and eventually you have a system that cleans up the news. You have a system that self-repairs in the face of adversity. I call that a win.

This is also a system that contains clear patterns of fact-finding, human argumentation through to consensus, and financial weighting to show how important everything was to the people having the argument…

Holy shit, is this an AI company?

It could be. Over time this generates a lot of data that is in some sense “cleaned up” by market forces. Cleaning up data with market forces is the thought that kicked all these other thoughts into place. The users would have to vote if they want to use that data to build anything. But imagine if you had an LLM engaged in this truth-seeking game. It wouldn’t quite be an LLM at a certain point, but imagine it had to follow this sort of review process and had the dollar figures there and the reputation scores for weighting. What might it learn to do? You could train something using adversarial training to learn how to make a really great representation of facts.

The pattern of harmonious human engagement may be our best chance of doing something like building a Michael instead of a Lucifer. That might sound crazy, but if you talked to me for four hours I think you’d have to agree I’m probably correct. Oh, the sperg I could unleash in that kind of a conversation. There are patterns in all of our data. What do you think a model that trains on things that engage the human limbic system with hyper normal stimulus will be? Do you think that will be a boon for humanity?

I started down this thought process some number of years ago by trying to imagine what it would feel like to be a digital super-intelligence and what I would need in order to have a good way of interacting with humanity. This was the eventual result.

So, what’s next?

I’m trying to build a demand signal. That means I need to create social proof that people really, really want something like this. Please share this article with people you know. I’d like Substack to build something like this in conjunction with major newspapers and then eat the whole news world. I also know that if I were the CEO of Substack and someone came into my office and pitched this I would say “Absolutely fucking not.” Without some indication that an overwhelmingly large number of Substack users really, deeply want something like this, it can’t be built. You’d risk alienating all of your top earners, many of whom are journalists. You only have a few million dollars to spend on development each year and that has to go toward building out your core business.

But if your customers are clamoring for it, the idea is popular, your top earners like the idea, and a newspaper owner knocks at your door and offers to partner?

That makes a lot of things possible.

If nobody is building this, then having lots of people here makes it easier for me to build it in the future if I have to.

Otherwise, push for a more republic-style approach to content moderation wherever possible. Ask to share blocklists instead of blocking people one by one. Those could be stepping stones to the better system.

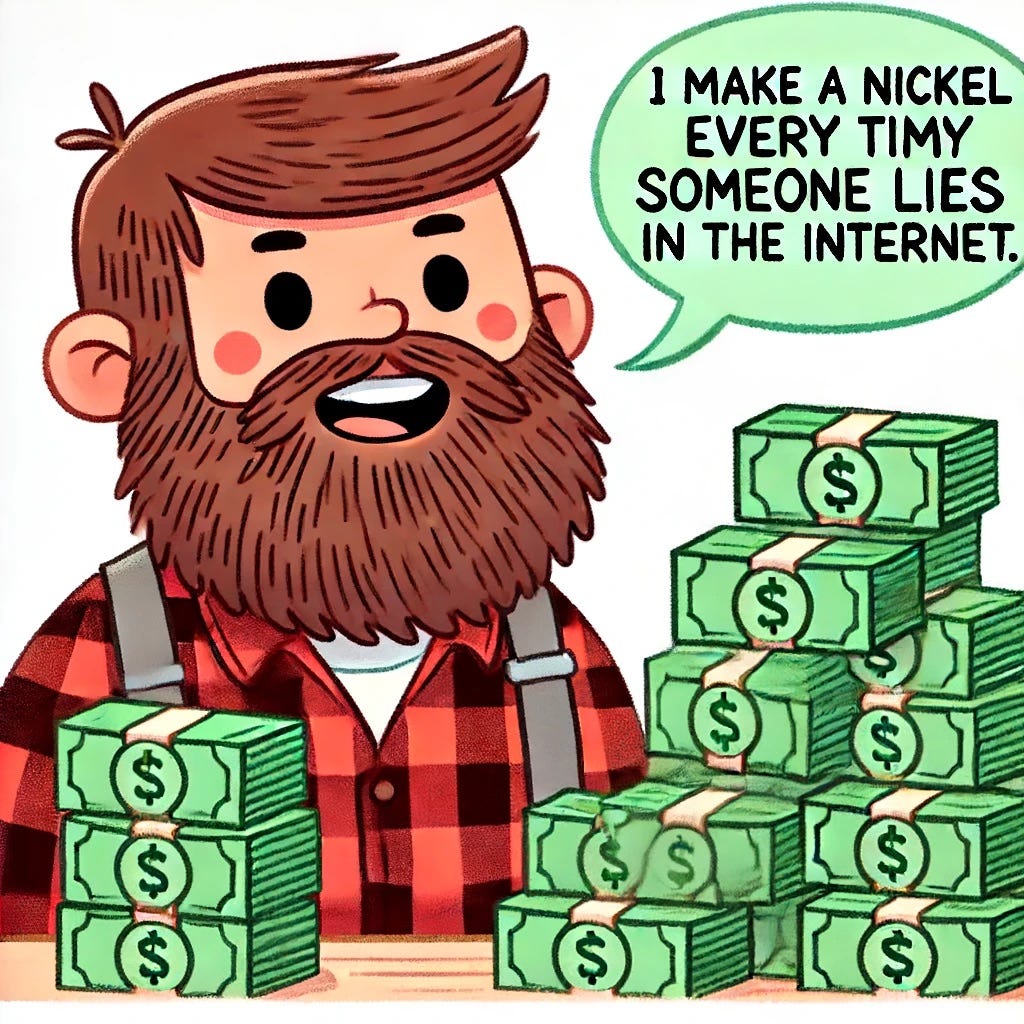

Anyhow, it’s late and I am weirded out that all the ChatGPT-generated lumberjacks actually look like me, so thank you for reading.

People felt “Index” was too cold and clinical.

In the far future, you could do this with streaming video and audio as well with AI. Literally have the same video with the same people, but have them say different things.

Literally a billionaire’s billionaire.
