No One is an LLM Implementation Expert

The Courage to Actually Think, First Principles Reasoning, a Glimpse of Things to Come

I sometimes feel like I’m speaking out of both sides of my mouth when it comes to AI. Yes, I agree with Gary Marcus, Freddie deBoer, and Dwarkesh that LLMs are missing core capabilities needed to deliver on some of the wildest promises made by AI companies. You can’t just plug these things into a company and generate immediate returns through productivity gains. However, in my secret heart there are parts of me that sneak glimpses around the corners of time and hold beliefs that I think would embarrass Yudkowsky.

The reason I feel this way is definitional. We already have “general intelligence.” It’s just that I never held “general intelligence” to mean “godlike.” There’s all this other, trickier motivational psychology stuff that has to go on top before general intelligence is useful. Modern AI is mayfly intelligence. Someone helps you do something and then dies before he can learn anything that would help you do it better the second time. You can see what I mean if you imagine AI as a person who has been brain-damaged to the level of a large language model. How useful can a guy really be if his memory resets on every interaction and his core personality is a sort of mind-flute that will play notes all over the place while trying to accomplish a task?

There are think pieces about this all over the place, almost gleefully expecting the core labs that create LLMs to explode.

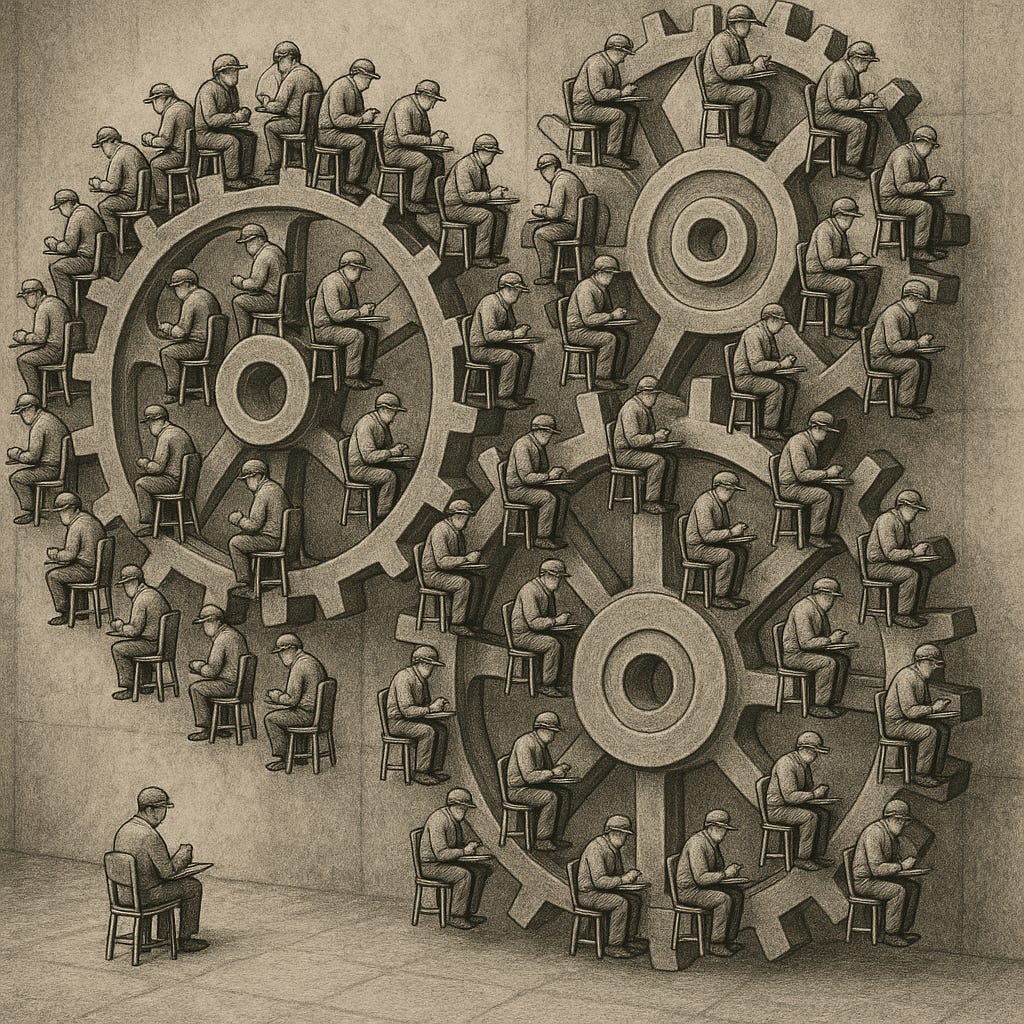

I’m tempted to believe this myself. LLMs lie all the time. Is it really economically valuable to have a million guys show up at your work with no short-term memory and a hazy relationship with the truth, even if they promise to work for free? What are you going to do with those million guys that’s productive?

This is the exact place people give up…

But if you’re a good manager and you can get a million people to work for free, isn’t it worth thinking really hard to imagine ways you could build your workflows around their disabilities? Say you built your tasks in such a way that each one was dead simple for someone who couldn’t remember things long term. And say you could check their work automatically, so you were only using their judgement to string together very small chunks of work. At a certain price point you’d be willing to patch on a lot of bubble gum and duct tape to make this kind of thing hang together.
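To make that concrete, here is a minimal sketch of the shape I mean, not anything from a real project. Every name in it is hypothetical: `FlakyYearExtractor` is a stand-in for a stateless model call that is wrong half the time, `year_check` is the cheap automatic check, and `run_checked` just retries a tiny task until the check passes or hands it off to a human.

```python
import re
from typing import Callable, Dict, List, Optional, Tuple

Worker = Callable[[str], str]       # stateless: sees only the task text
Check = Callable[[str, str], bool]  # cheap, automatic verification


def run_checked(task: str, worker: Worker, check: Check,
                max_attempts: int = 3) -> Optional[str]:
    """Retry one small task until the automatic check passes, else give up."""
    for _ in range(max_attempts):
        answer = worker(task)  # nothing is remembered between attempts
        if check(task, answer):
            return answer
    return None  # caller should escalate to a human


def run_pipeline(tasks: List[str], worker: Worker,
                 check: Check) -> Tuple[Dict[str, str], List[str]]:
    """Run every task independently; collect verified answers and escalations."""
    done: Dict[str, str] = {}
    escalated: List[str] = []
    for task in tasks:
        result = run_checked(task, worker, check)
        if result is None:
            escalated.append(task)
        else:
            done[task] = result
    return done, escalated


class FlakyYearExtractor:
    """Hypothetical stand-in for an LLM call: every odd-numbered call is useless."""

    def __init__(self) -> None:
        self.calls = 0  # only simulates flakiness; the "worker" has no real memory

    def __call__(self, task: str) -> str:
        self.calls += 1
        if self.calls % 2 == 1:
            return "not sure"
        match = re.search(r"\b(\d{4})\b", task)
        return match.group(1) if match else "none"


def year_check(task: str, answer: str) -> bool:
    """Accept only a four-digit answer that literally appears in the task text."""
    return re.fullmatch(r"\d{4}", answer) is not None and answer in task


if __name__ == "__main__":
    tasks = ["Founded in 1998 in Ohio", "No date here"]
    print(run_pipeline(tasks, FlakyYearExtractor(), year_check))
```

The point of the design is that the check is much cheaper than the work, and that no single task depends on the worker remembering anything. Anything that never passes the check lands in the escalation pile instead of quietly polluting the results.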

As it happens, I’m leading a major AI implementation project, and inwardly I was groaning over how to make the thing actually work. There are people who know lots and lots about how to grow an AI model, but there aren’t a lot of people who are implementing these things in profitable ways. That’s the entire point of those first few paragraphs! People have a legitimate point that today these things aren’t generating enormous returns on investment. What are the odds that I’m going to find One Dumb Trick to make them function in a way that is economically positive for my company?

As it turns out, pretty good.
